The Fresh Protocol

Version 3.0

Author: Devon Shigaki

Copyright © April 18, 2024. All Rights Reserved.

// THE FRESH PROTOCOL

Abstract

Centralizing consumer data has made corporations prime targets for hacks, creating an environment for fraud that leads to inaccurate and unverified credit data. A severe breach can quickly become a class-action lawsuit, resulting in an even more fragmented data pool. Fragmented data creates a market of competition instead of innovation, and innovation without adoption is only invention. Companies cannot adopt better ideas and policies if data sources become even more siloed, but we cannot let companies harvest user data for profit at the consumer's expense. We have a solution.

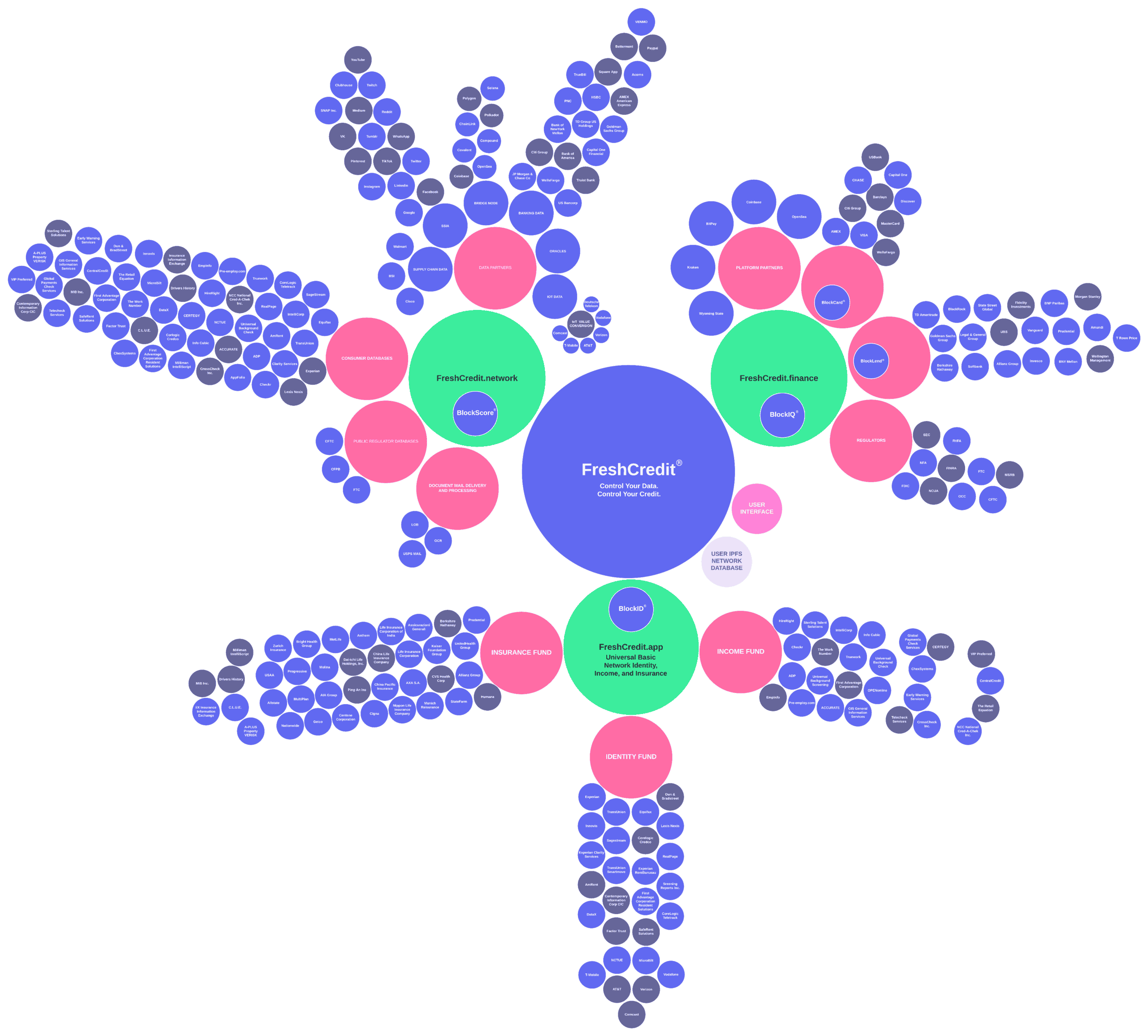

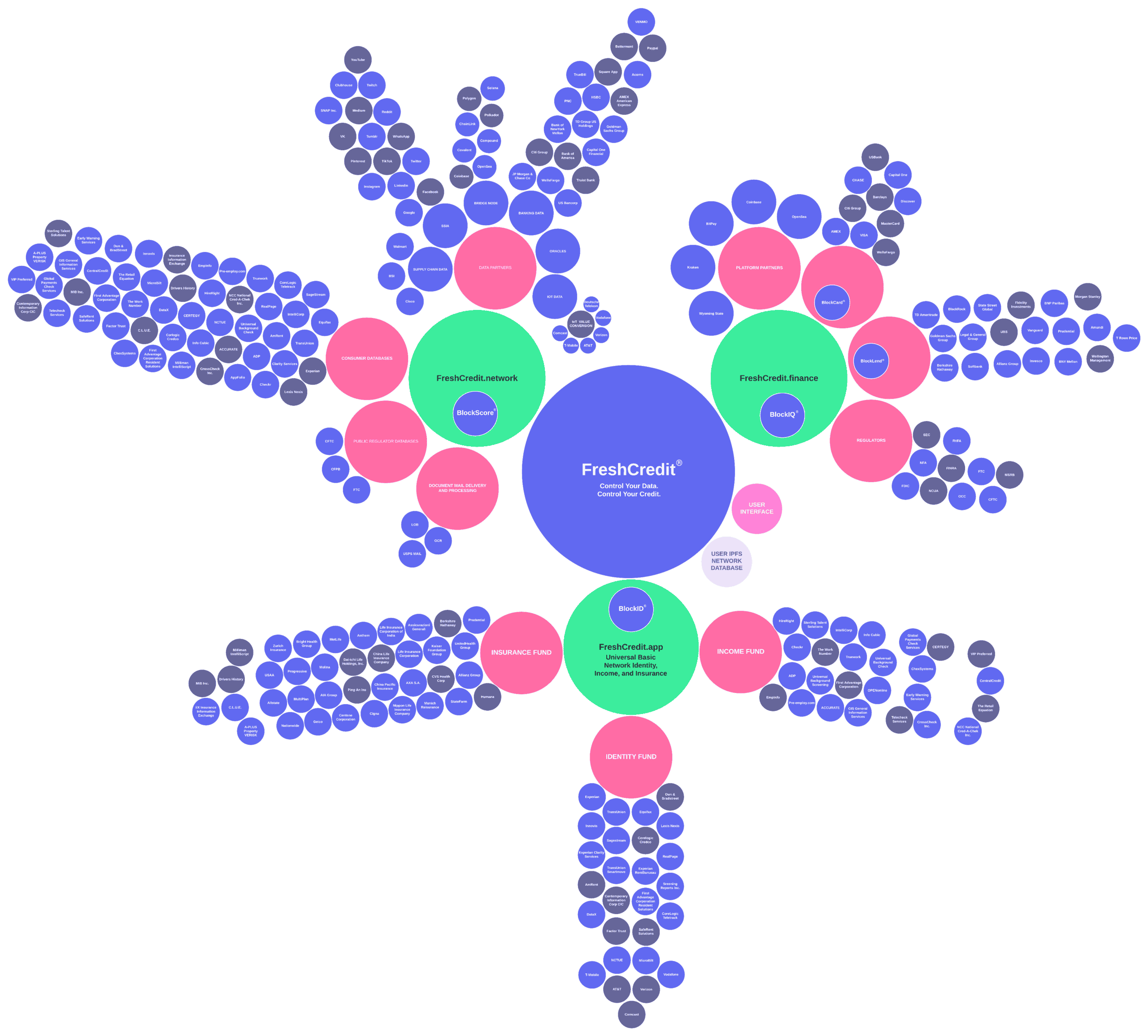

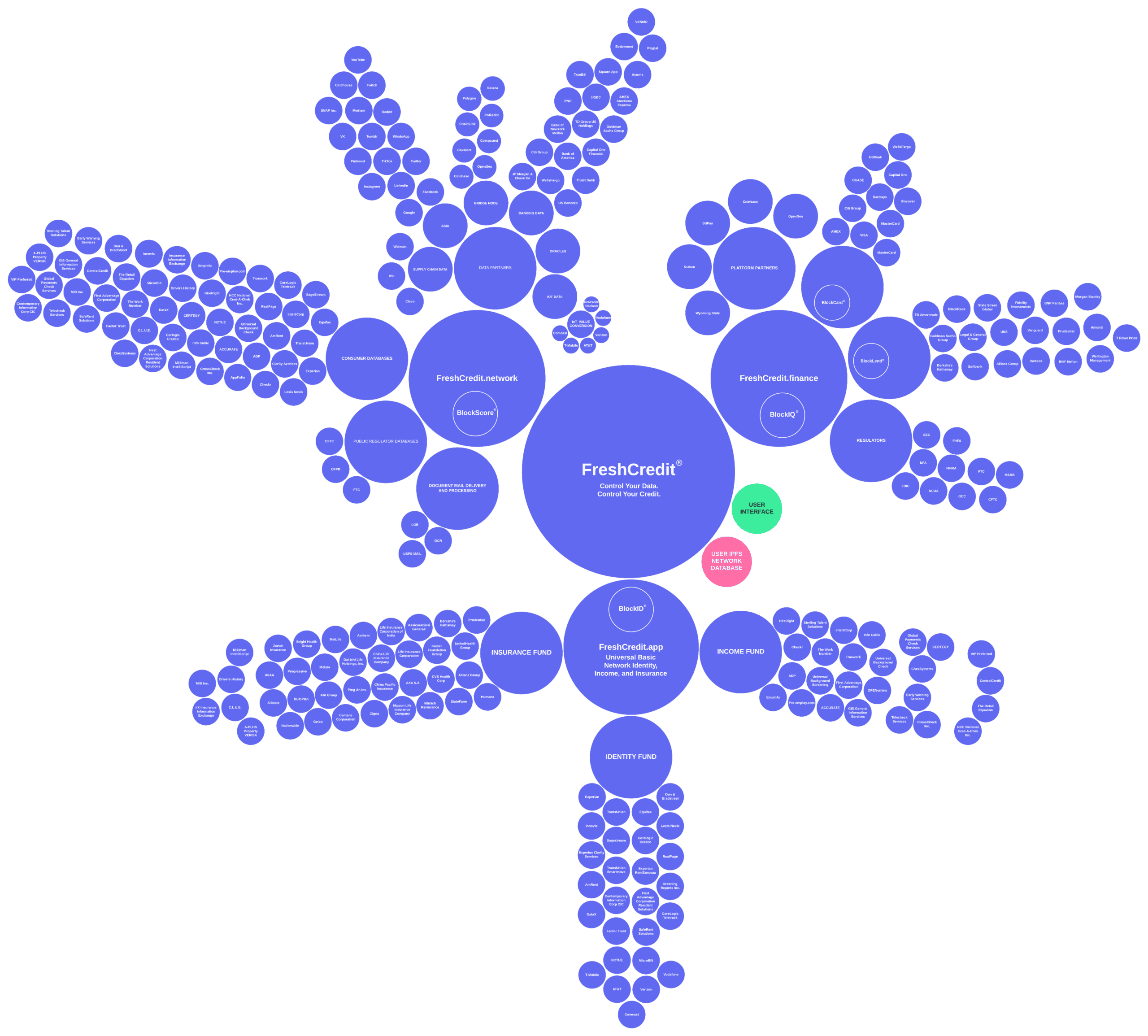

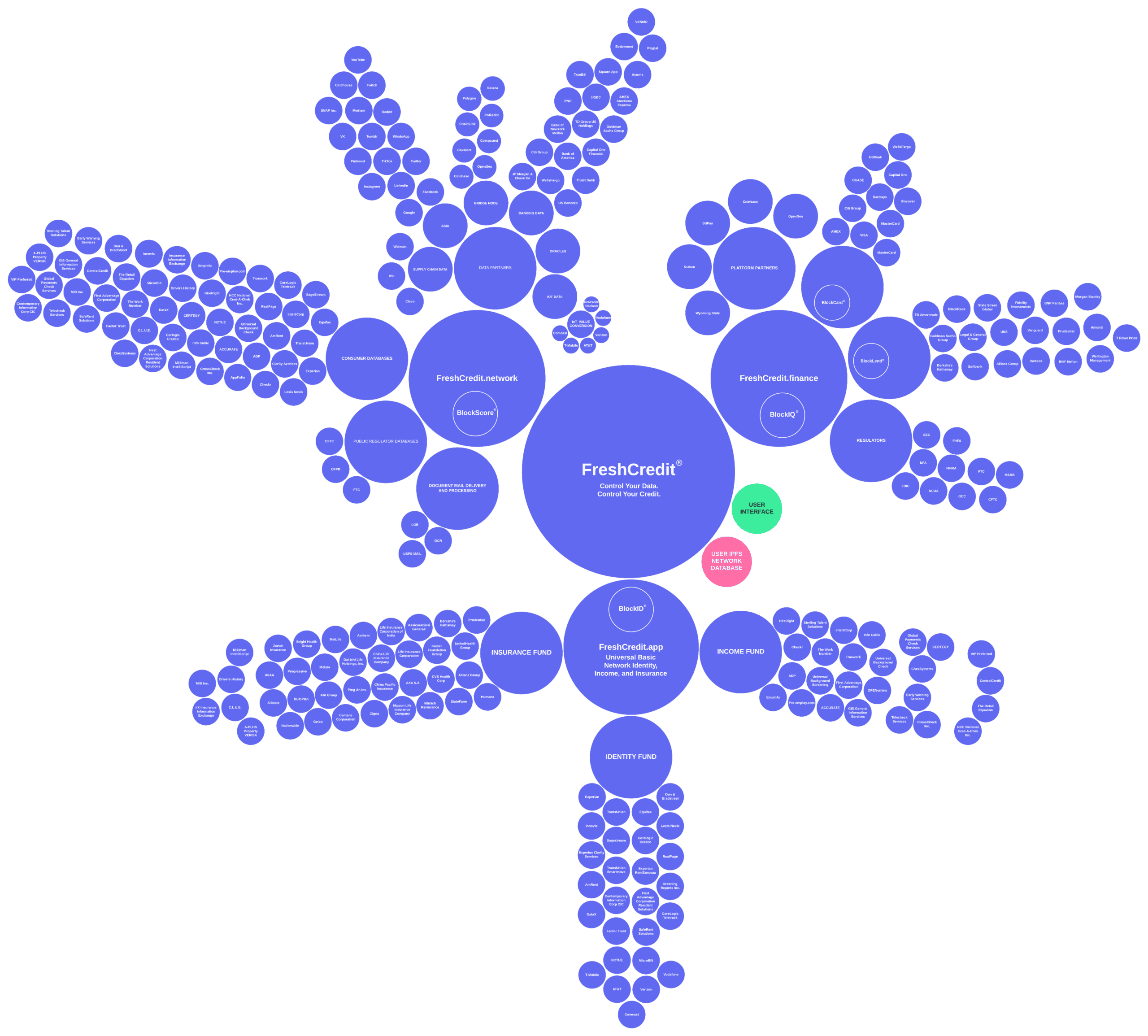

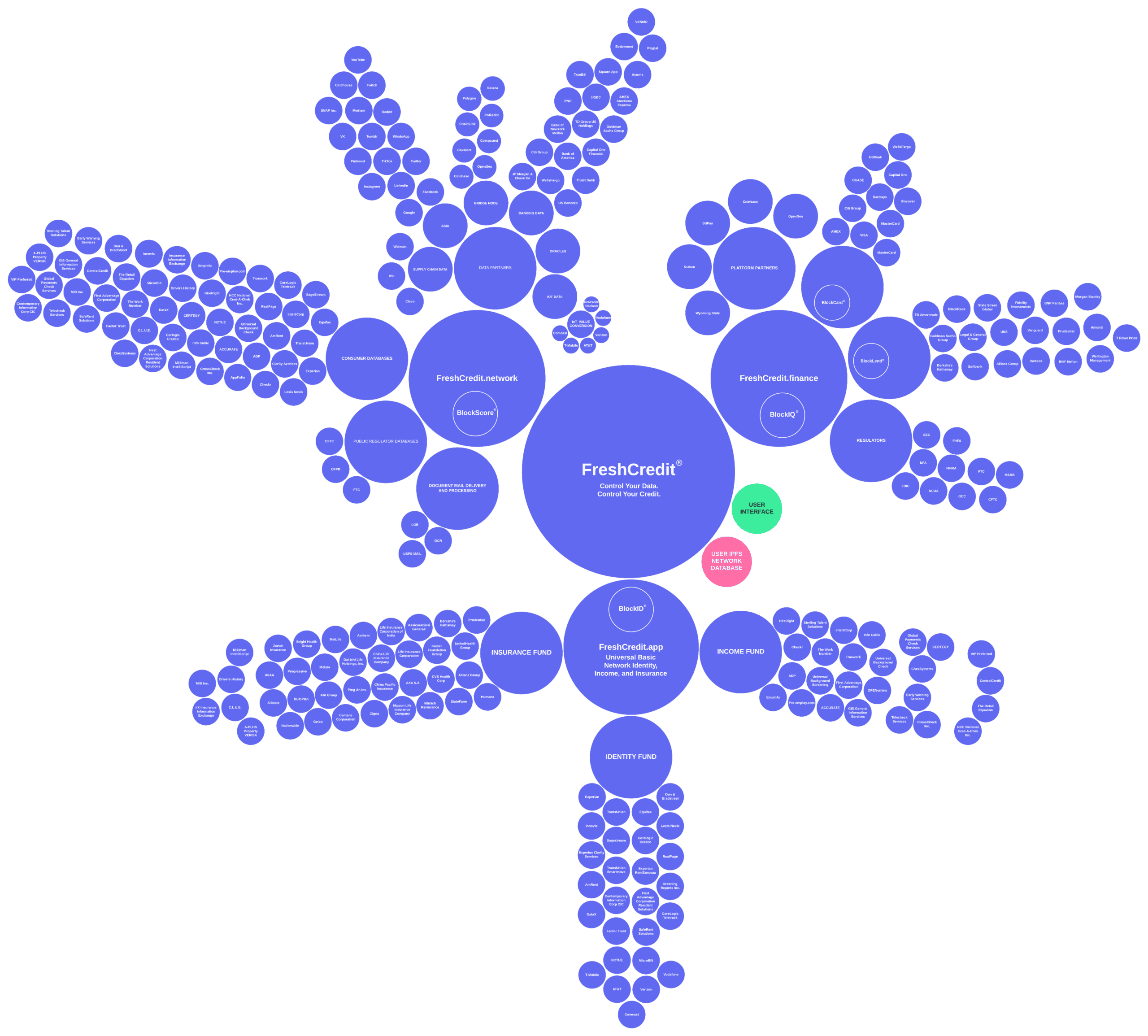

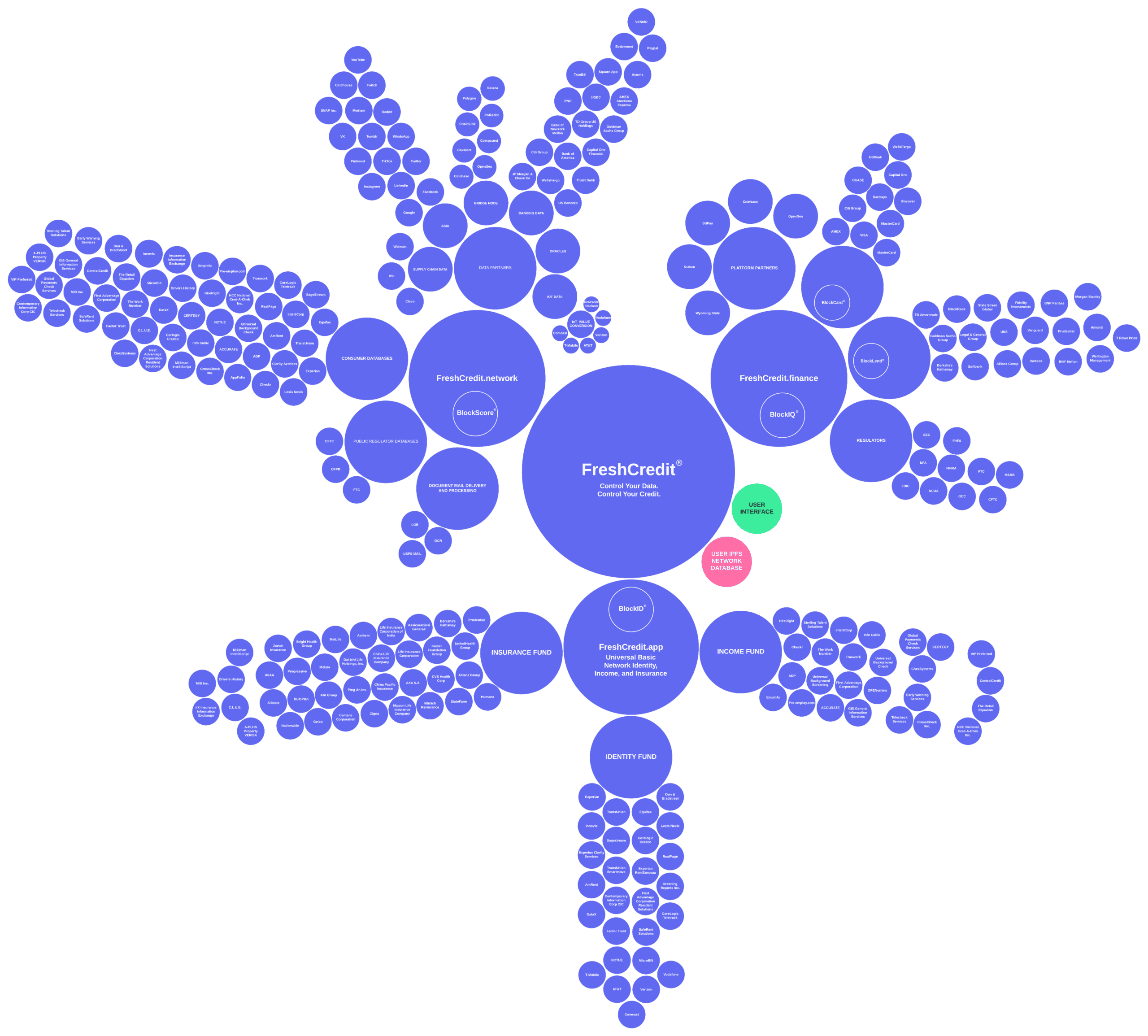

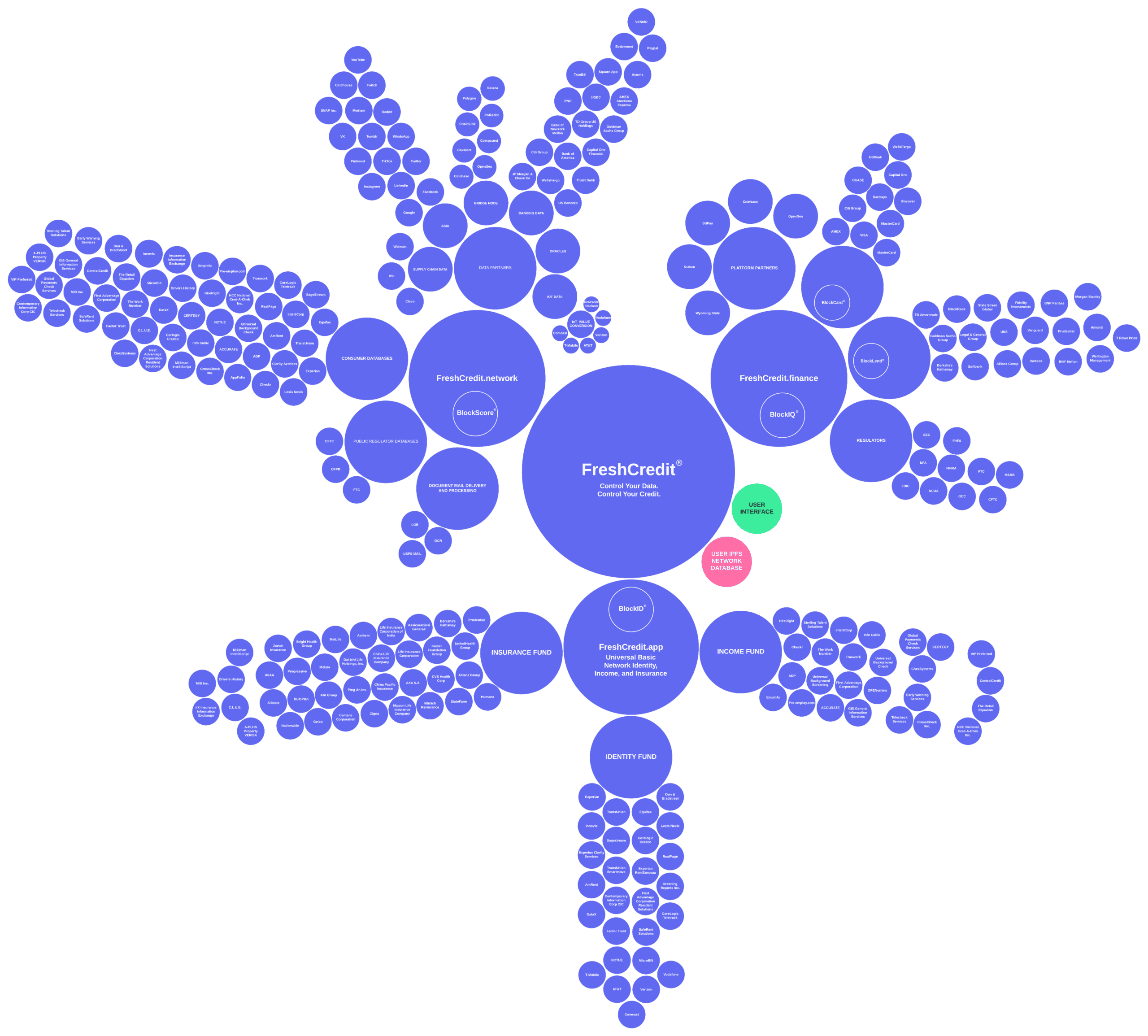

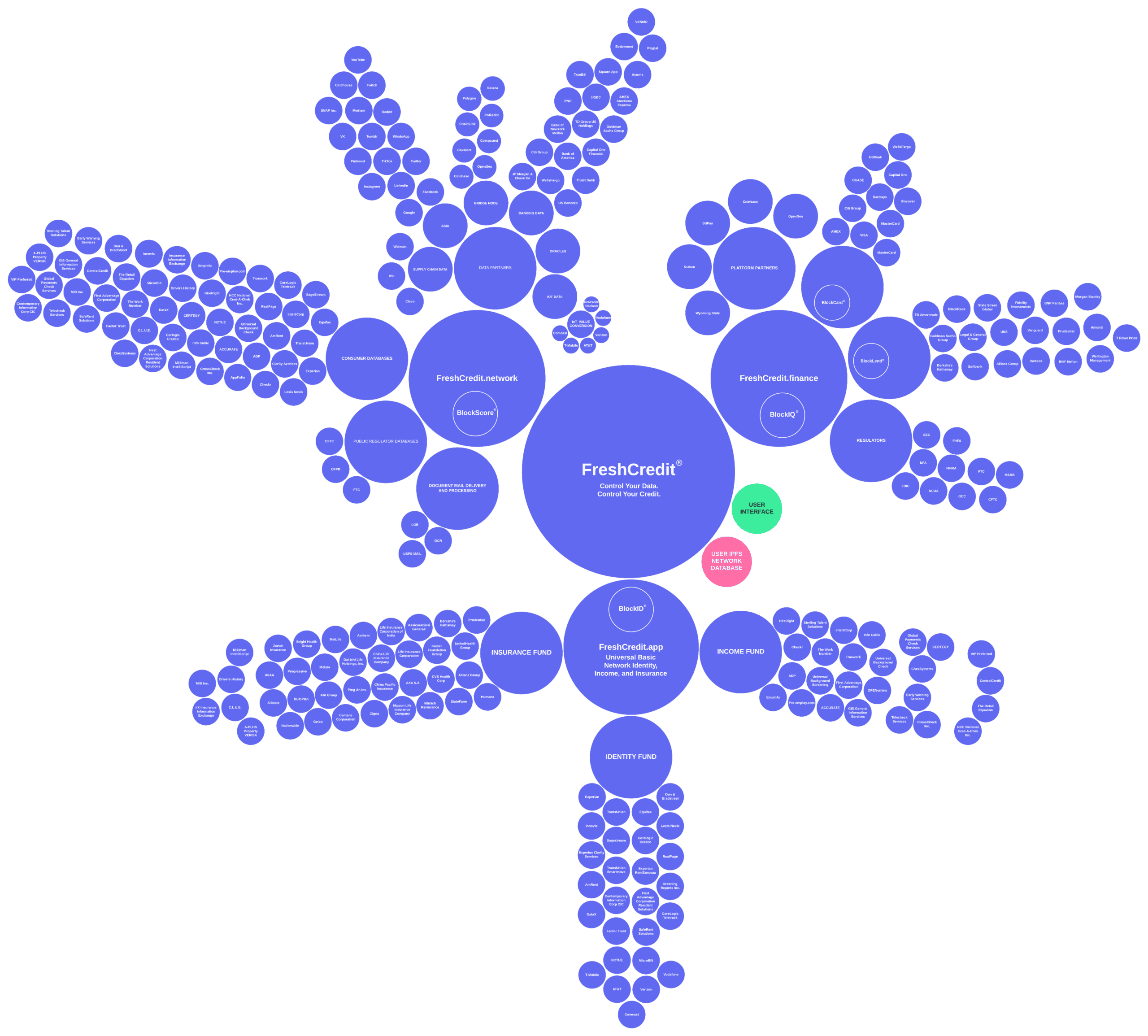

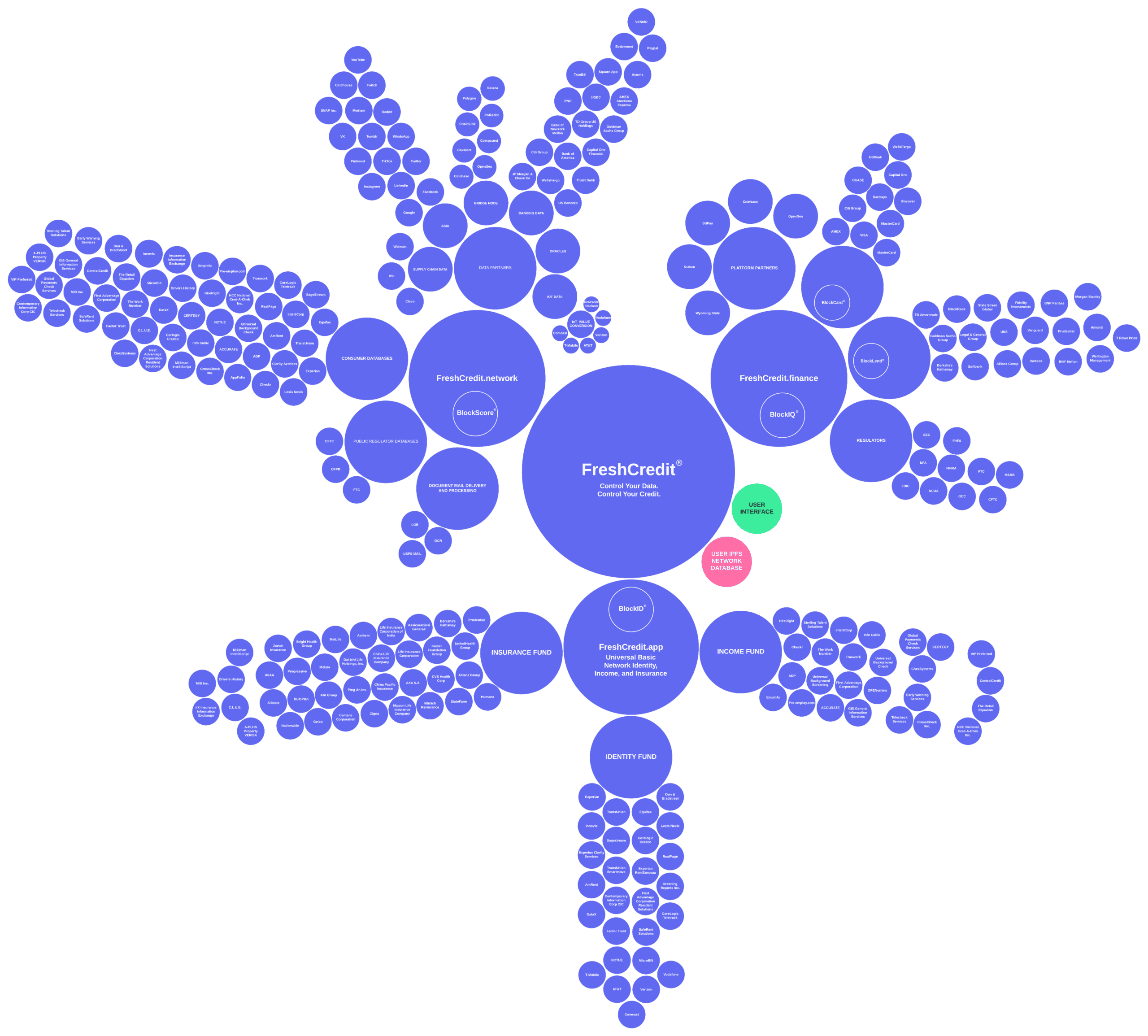

This whitepaper introduces a global, decentralized credit scoring and lending protocol that addresses these concerns and limitations by moving the credit reporting and scoring process onto the blockchain. The Fresh Protocol is designed to provide a standardized, composable credit reporting and scoring protocol that simultaneously reduces consumer and business risk, scales the benefits of credit scoring globally, and interoperates with both decentralized and centralized applications.

The Fresh Protocol is a programmable ecosystem that allows both fiat and digital asset lenders to issue compliant loans using a standardized, modular framework. This framework provides a compliant and composable risk assessment process that can be deployed on a global scale, while increasing competition to lower fees, improve the borrower experience, and reduce the barrier to entry.

The Fresh Protocol provides solutions to some of the most complex problems currently thwarting global economic growth and commerce, including cross-border credit scoring, credit drift, regulatory compliance, identity theft, defaults, and the barriers to entry consumers face because of a lack of financial data and credit scoring infrastructure.


Table of Contents

- Abstract
- The Specific Industry Problem
- Why Smart Contracts Won't Work
- Why Homogeneous Layer 2 Solutions Won't Work
- Technical Paper (to be added): The Problem, Why Substrate, Security, Incentive
- What Is the Substrate Blockchain?
- How Do Parachains Scale?
- User Privacy
- Control of Data
- Data Ownership
- Sovereign Financial Identity and Data Control
- Identity Validators
- Data Reporting Format
- Sharing Data
- Tamper-Proof Reporting
- Implementing FCRA Compliance
- Decentralized Cloud Storage
- Handling Bad Actors and Spam Accounts Efficiently and Organically
- Centralized Financial Data: Plaid
- Liquidity and Cash Reserves: Plaid
- Physical Assets and Traditional Investments: Plaid
- Experian, TransUnion, Equifax, D&B, Schufa, Círculo de Crédito
- Payment History
- Loan Activity
- Total Debt
- USPS Mail System
- Academics
- USPTO Database
- CFPB Database
- CFTC Database
- Medical Records
- Public Court Records
- Meta
- Cryptocurrencies
- Non-Fungible Tokens
- Metaverse
- IoT Devices
- A Fair, Compatible, Secure Network
- An Inclusive, Composable, Modular Framework
- A Transparent, Compliant, Scalable Infrastructure
- Public Data Sources
- Decentralized Data: Decentralized Knowledge Graphs (DKGs), Chain Analysis
- Centralized Data: Public Oracles for Economic Activity (Regulatory Scores, Indices, Supply Chain Data, Security Exploits, Network Outages, Financial Markets, Social Open Graphs)
- What Is a Good Score?
- Crypto Wallet (Score Cap)
- Crypto Wallet with Social KYC (Score Cap Increase, Optional)
- Crypto Wallet with Financial KYC (Score Cap Lifted, Optional)
- What Generates a Bad Score?
- Scoring Factors
- Reliability Score: Consumer Behavior
- Financial Score: Financial Data
- Social Score: Public Identity
- What Generates a Bad Score?
- Public Score: Oracle Data
- Broadcast Set of Storage Identifiers to Network of Nodes: Network (Technical Paper)
- Misunderstanding Data
- Data Fragmentation
- Ethical Data Practices
- What Is the BlockIQ API?
- Highest-Quality First-Person Data
- Regulatory Pathway
- Utility
- Unit of Account
- Remittance and Settlement
- Transaction Bridge
- Network Rewards
- Stimulative Model: No Cap
- Organic Growth of Network Data
- Compliance: Located in Wyoming
- Share Data: Request access to consumer and/or business data, or to a particular consumer and/or business associated with a specific individual, organization, or node associated with the original user (API, Technical Paper)
- Efficiently Manage Risk and Liability
- Shared Network Security
- Compliance and Transparency
- The Value of Big Data
- Leveraging the Value of Big Data
- Autonomous Lending Pool
- Stimulating Real vs. Financial GDP
- Default Risk Parameters: Securing the Network
- Staking and Lending: Earn Tokens
- Staking and Borrowing: Burn Tokens
- Grant Consumer Credit: DAO (Technical Paper, to be added)
- Poverty and Violent Crime
- Ending Poverty Together
- Roadmap
- Sources and Credits
- Glossary
- Disclaimer


Background

Consumer Problems

Control and Privacy of Personal Data

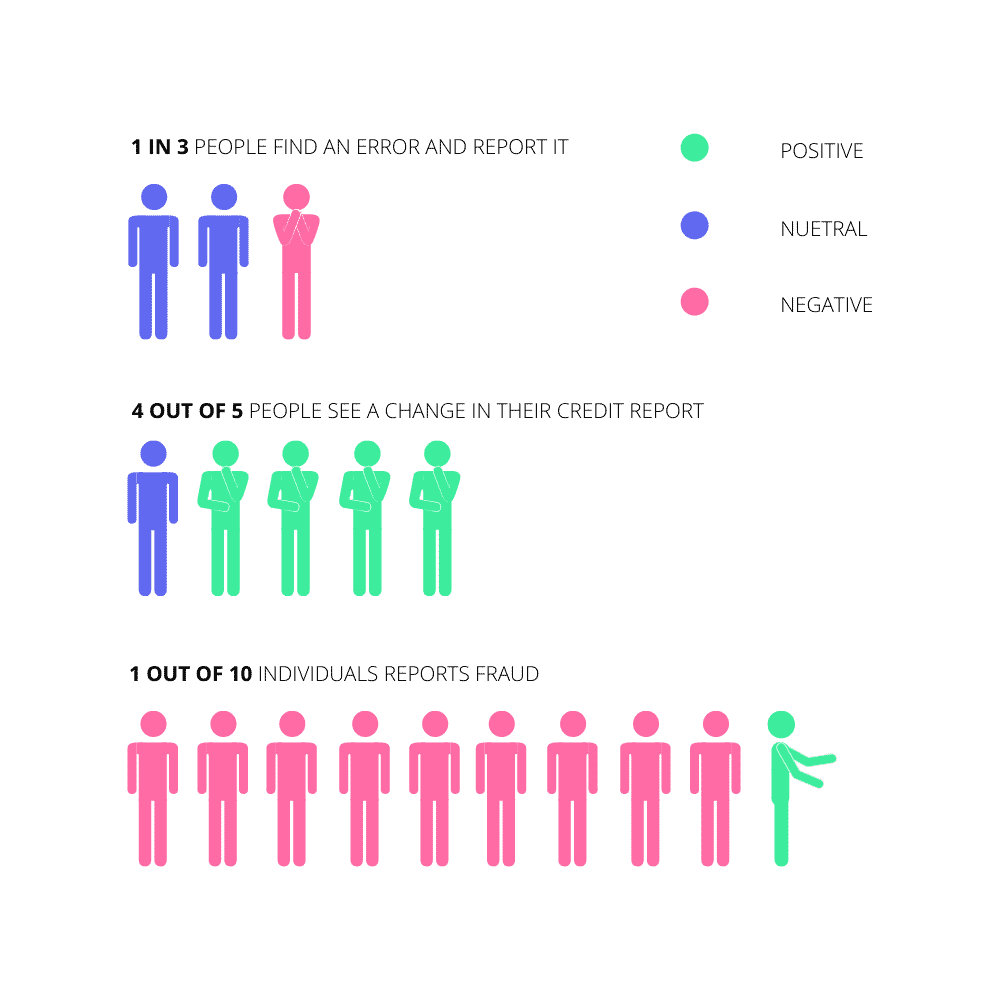

The FTC estimates that around 32% of all US credit reports contain at least one error that negatively affects the consumer's credit score. The issues with our current credit reporting, verification, and scoring protocols date back to the 1700s. One of the original purposes of a ledger was to record critical financial data and to assess an individual's credibility using the ledger as a reference. Bureaus then began to form, collecting information that allowed organizations to understand consumers' financial positions. These organizations eventually faced serious controversies over sexism, racism, and invasion of personal privacy.

This led to the creation of the credit scoring system known as FICO. In 2015 the US declared credit scoring a monopoly controlled by a single organization, FICO, which provides credit scores to more than 90% of the top US lenders. The FICO credit scoring system currently leaves 26 million Americans credit invisible and another 19 million unscorable. With the rise of the digital age, the amount of available data has grown exponentially, along with the ways to misuse it. A Penn State study identified multiple ways in which social media data could be misused for credit scoring if left unchecked. Current credit scoring providers cannot operate globally because of vast cultural, economic, and regulatory differences and requirements. Every country has its own culture, and culture shapes how we think about, spend, invest, manage, and use our wealth and credit. Copy-pasting models directly from mature markets like the US or China will not work.


Enterprise Problems

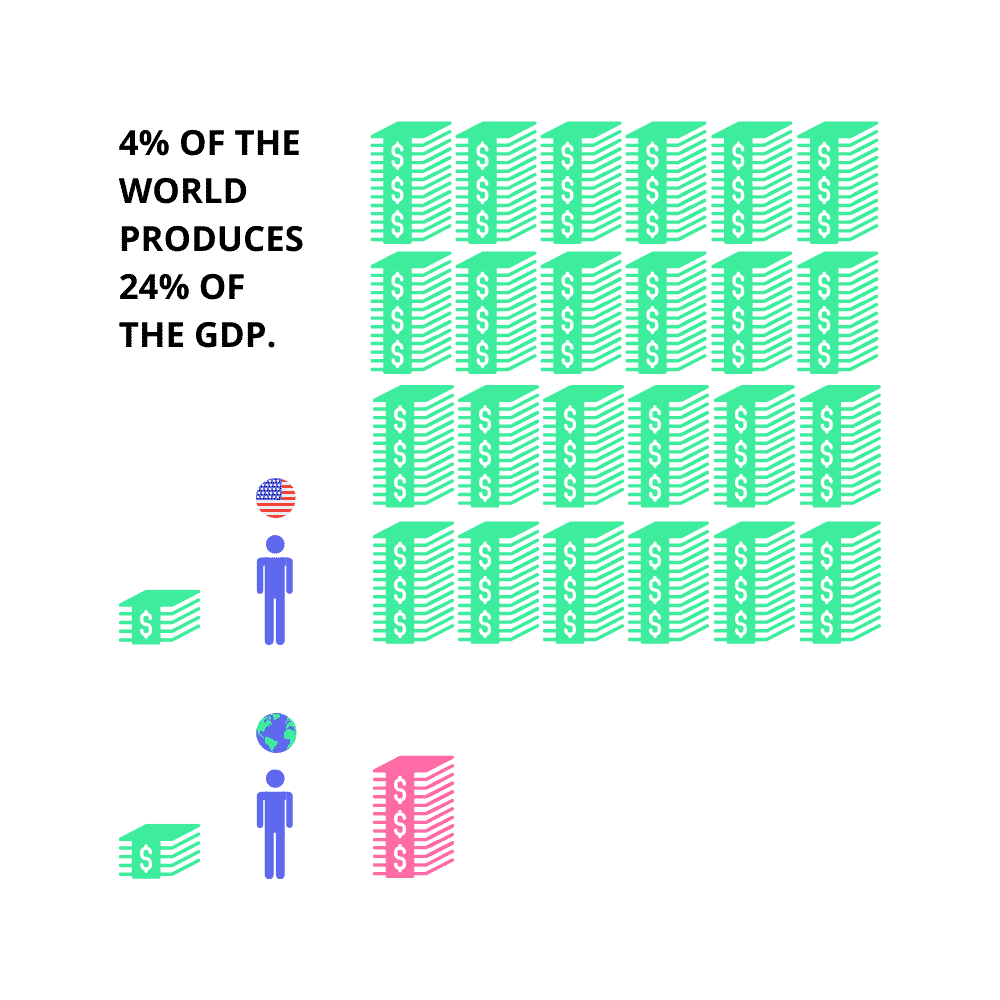

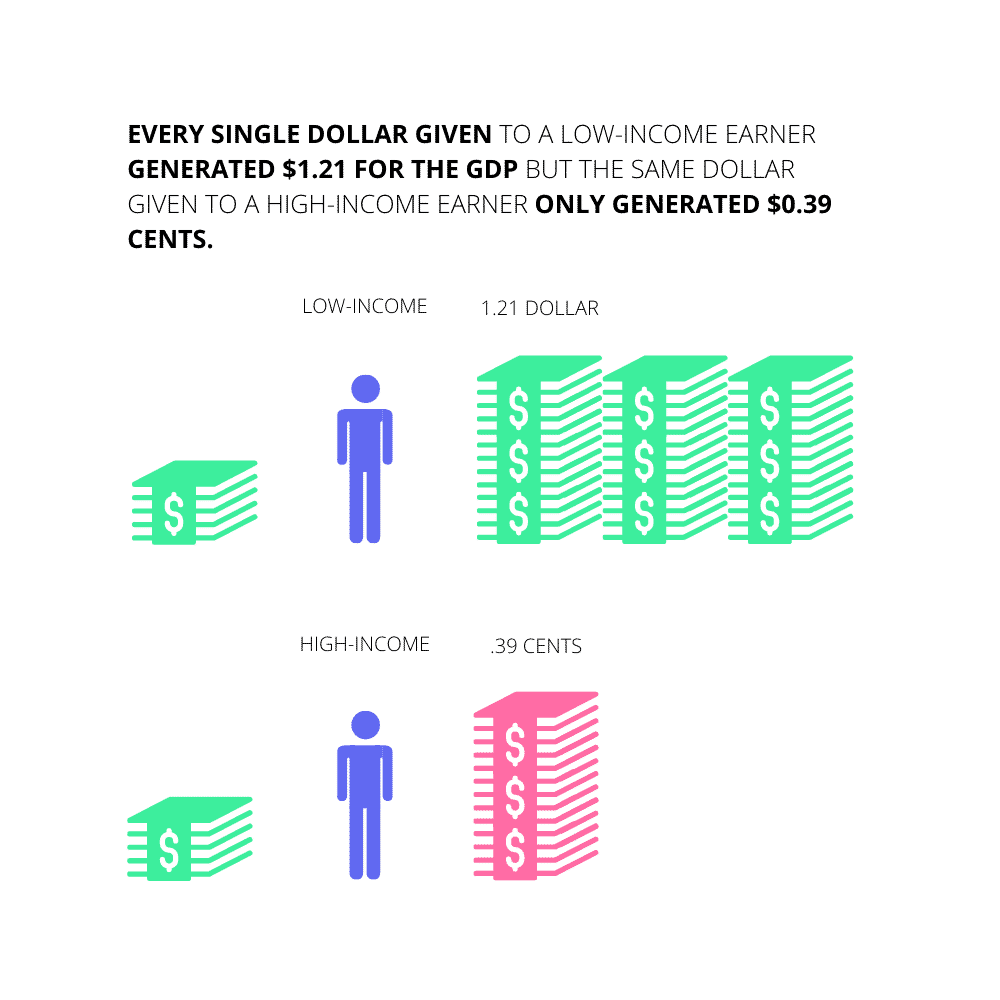

A Fair and Inclusive Scoring System

Even with all these issues plaguing the US credit system, we have still managed to become the most powerful financial country on the planet through the deployment of leveraged revolving debt (credit lines and credit cards). The US alone generates more than 24% of global GDP, driven largely by revolving leveraged debt. Most of this spending power rests on risk assessment protocols powered by credit scores, yet the US accounts for only about 4% of the global population. This means we may be excluding millions, if not billions, of creditworthy individuals from the worldwide economy, which could potentially represent hundreds of trillions of dollars added to total GDP.


Regulator Problems

A Safe and Private Network

Regulators can't enforce global standards efficiently in a rapidly shifting landscape as the entire capital market economy becomes digital.

The SEC's official position is that the credit scoring industry does not need more oversight or regulation. In summary, the SEC would like credit scoring to be as decentralized and transparent as possible, so that the public never perceives the government as incentivizing scoring providers in any capacity.

1* SEC Statement

The very idea that credit scores could be manipulated puts the integrity of the entire global bond market, and thus the world economy, at risk. The CFPB has stated that fair alternative scores are not only legal but encouraged, because they increase financial inclusion and economic exposure. This hands-off approach allows businesses and developers to build and expand into other markets with better technology without creating unnecessary regulatory concerns.

2* CFPB Position on Alternative Score


Institutional Problems

Access to Financial Tools

The significant benefits of financial identity and modern credit scoring are not available to everyone. Globally, billions of individuals are unbanked. Deloitte reports that internet penetration has gained significant traction in the past few years thanks to mobile smartphones; before wireless access, running physical network lines to certain areas was very difficult. Yet even with internet access, many individuals cannot reach financial institutions. According to a Stanford University study, one of the main reasons lenders declined to lend to subprime borrowers was incomplete data, leaving billions more unable to use a modern credit scoring system to borrow needed funds. A second Stanford study found that implementing credit scoring increased down payments by more than 40%, significantly increased overall loan size, and reduced defaults by 15%. Globally, over 3 billion people cannot obtain a credit card, and over 90% of the population in developing nations find it difficult to access debt financing.

- The Unbanked – Deloitte*

- Liquidity Constraints for Subprime Lending with Imperfect Information – Stanford*

- Credit Data – Modern Credit Scoring's Effect on Lending Down Payments and Defaults – Stanford*

- Defaults: 35% of loans default in the first year*


Market Overview

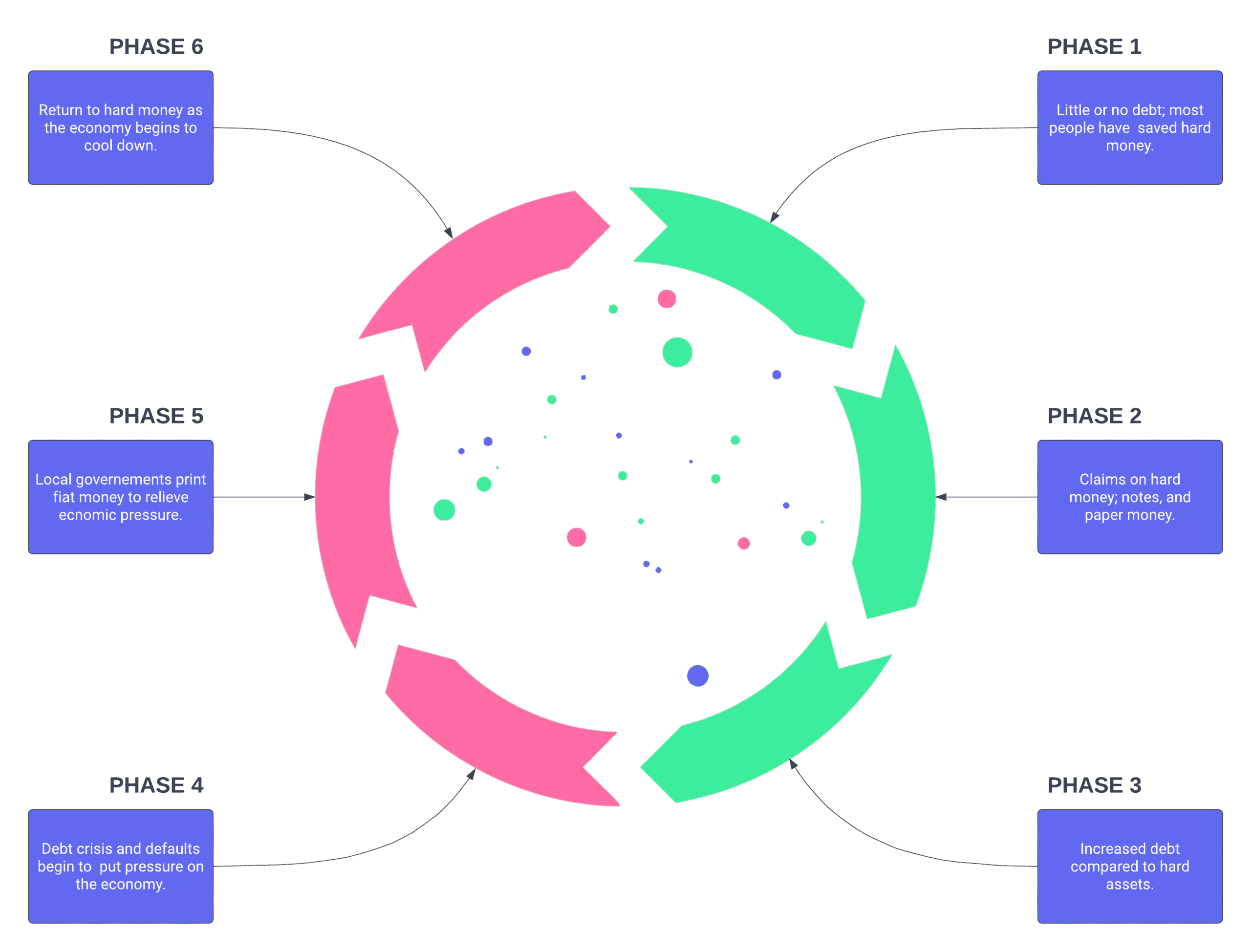

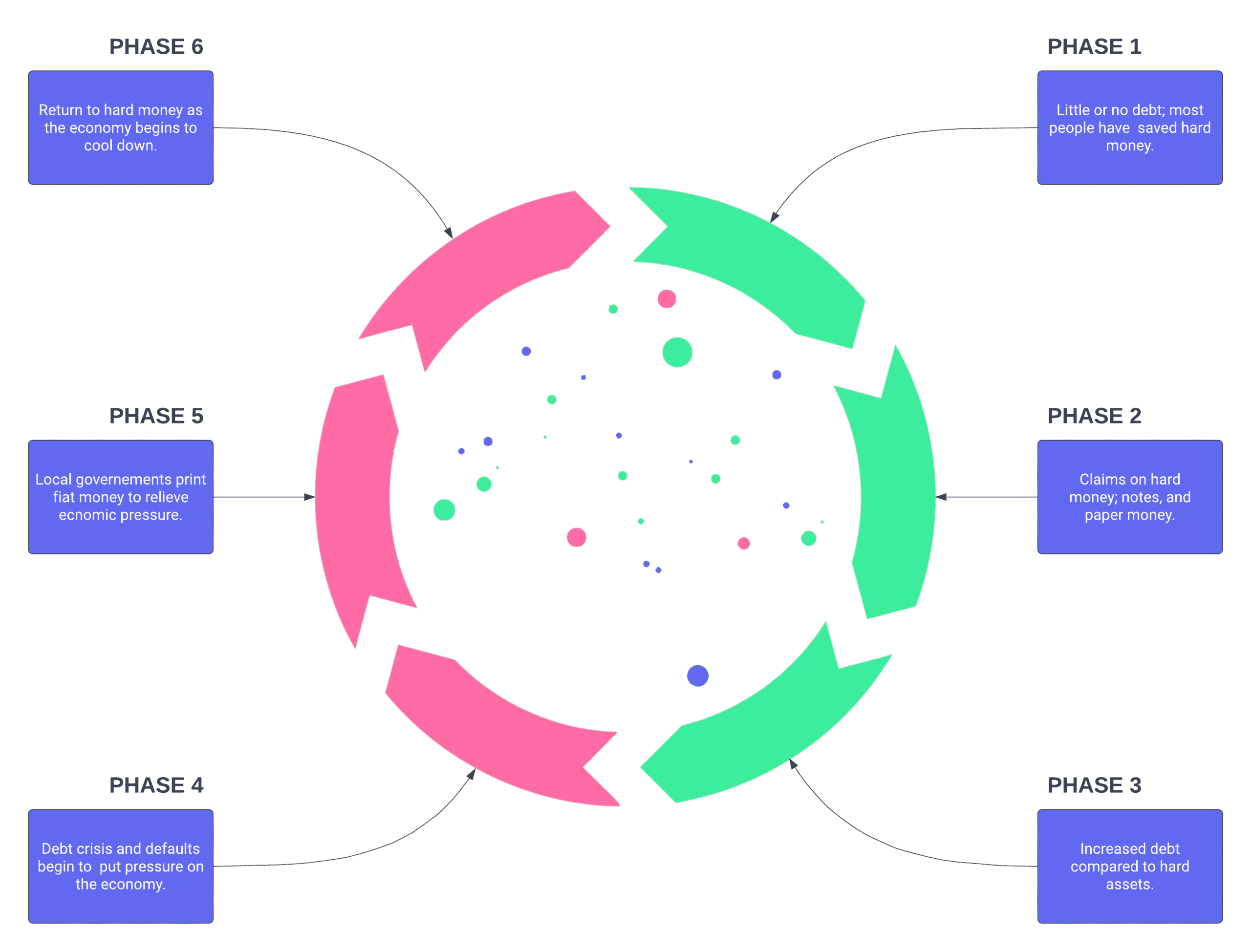

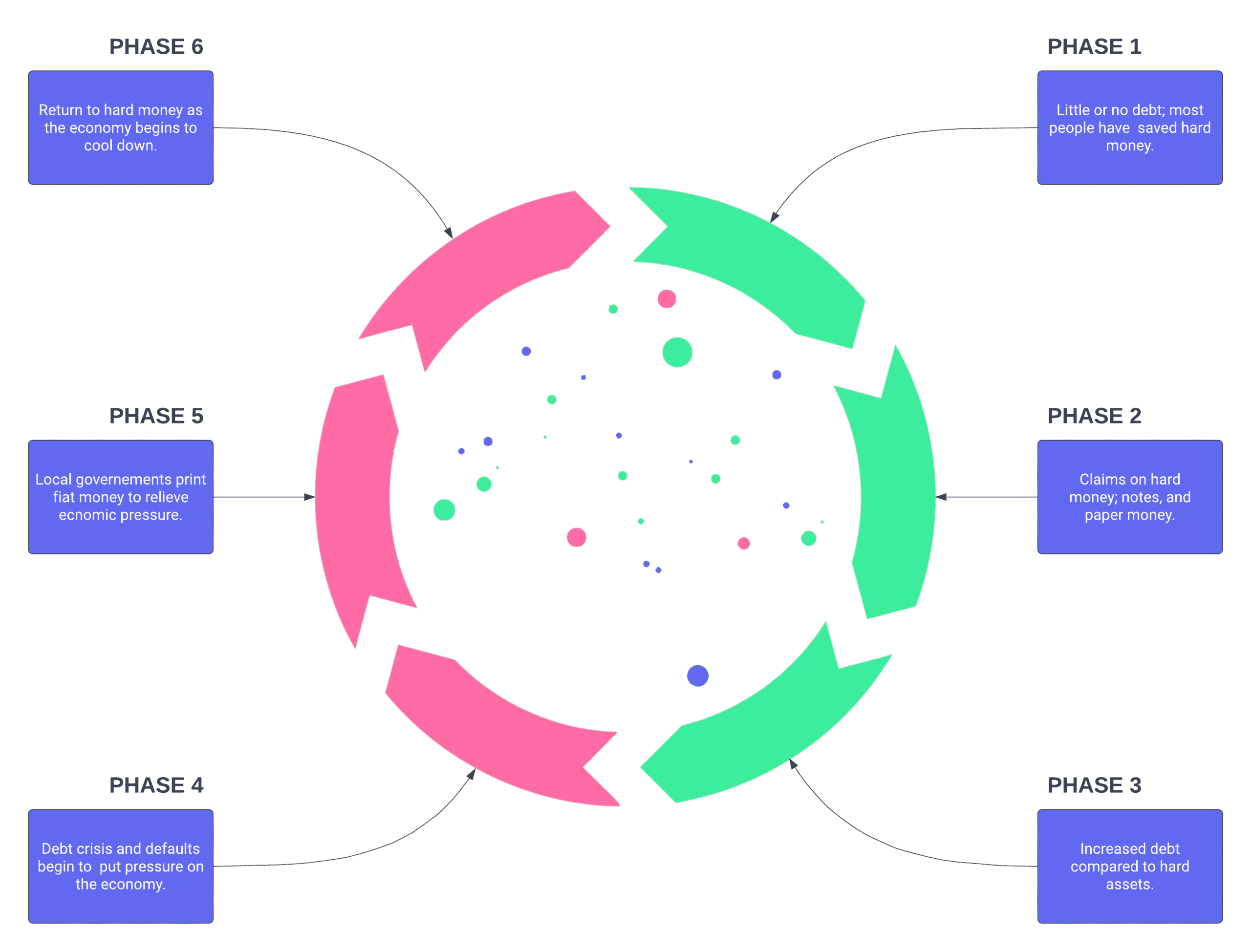

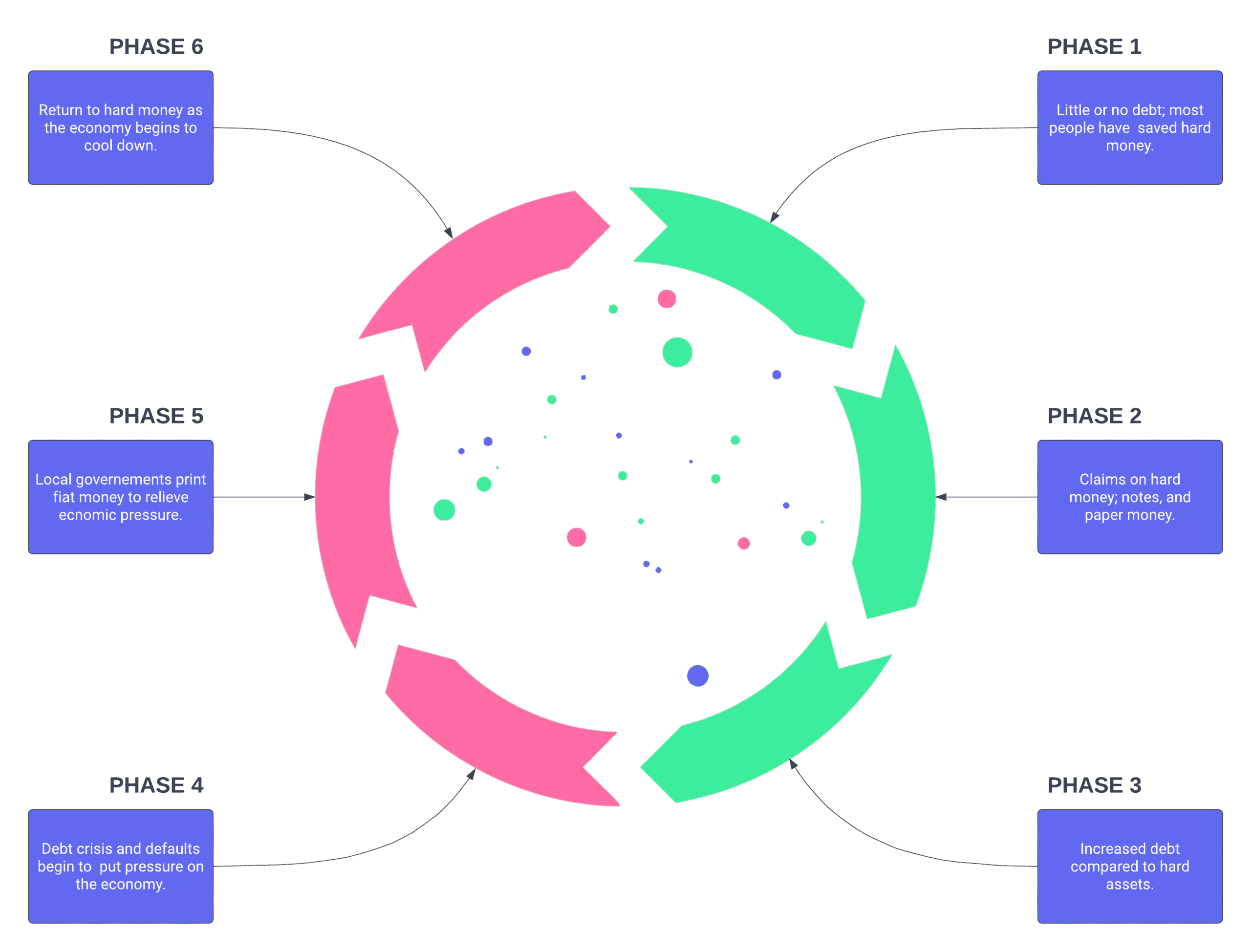

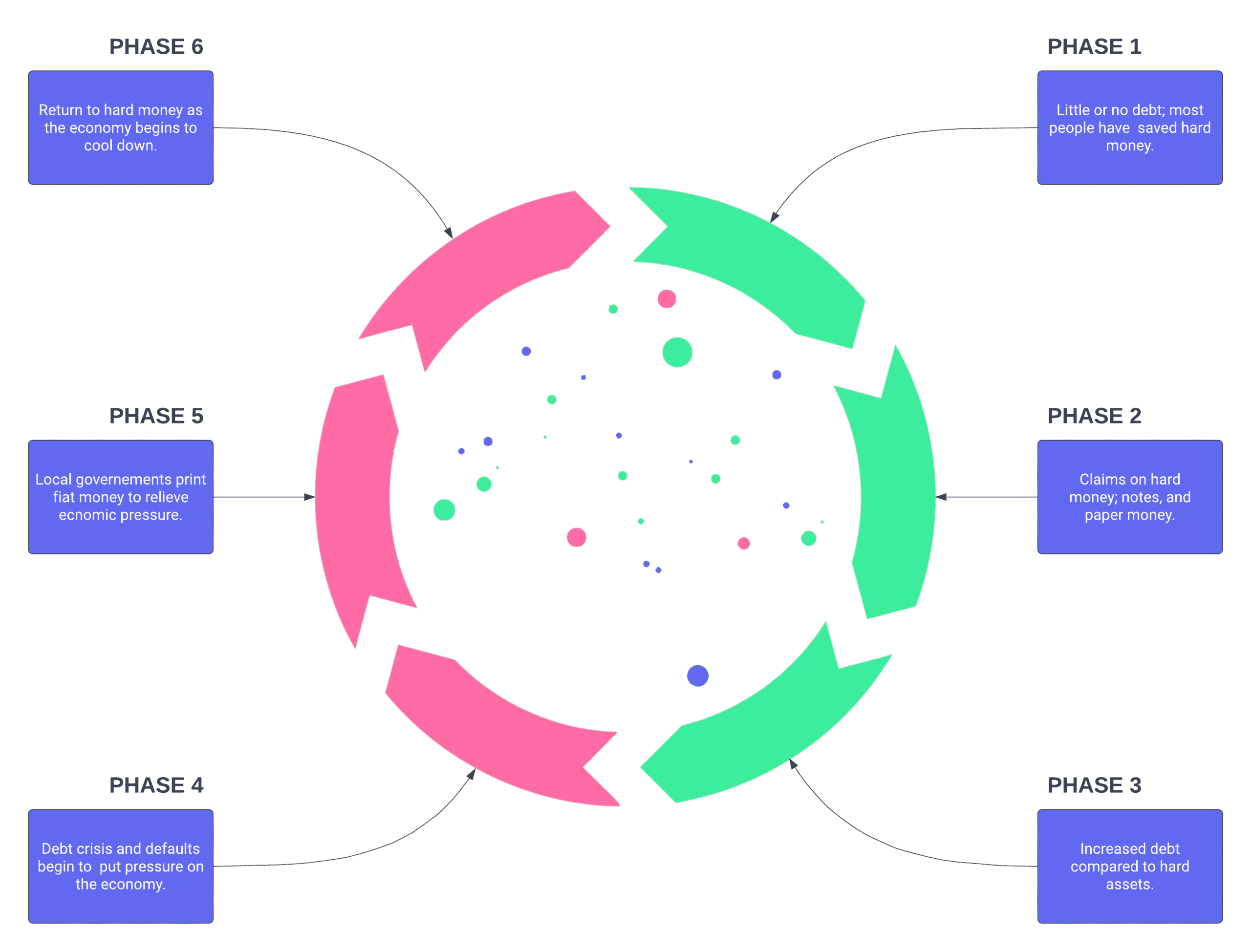

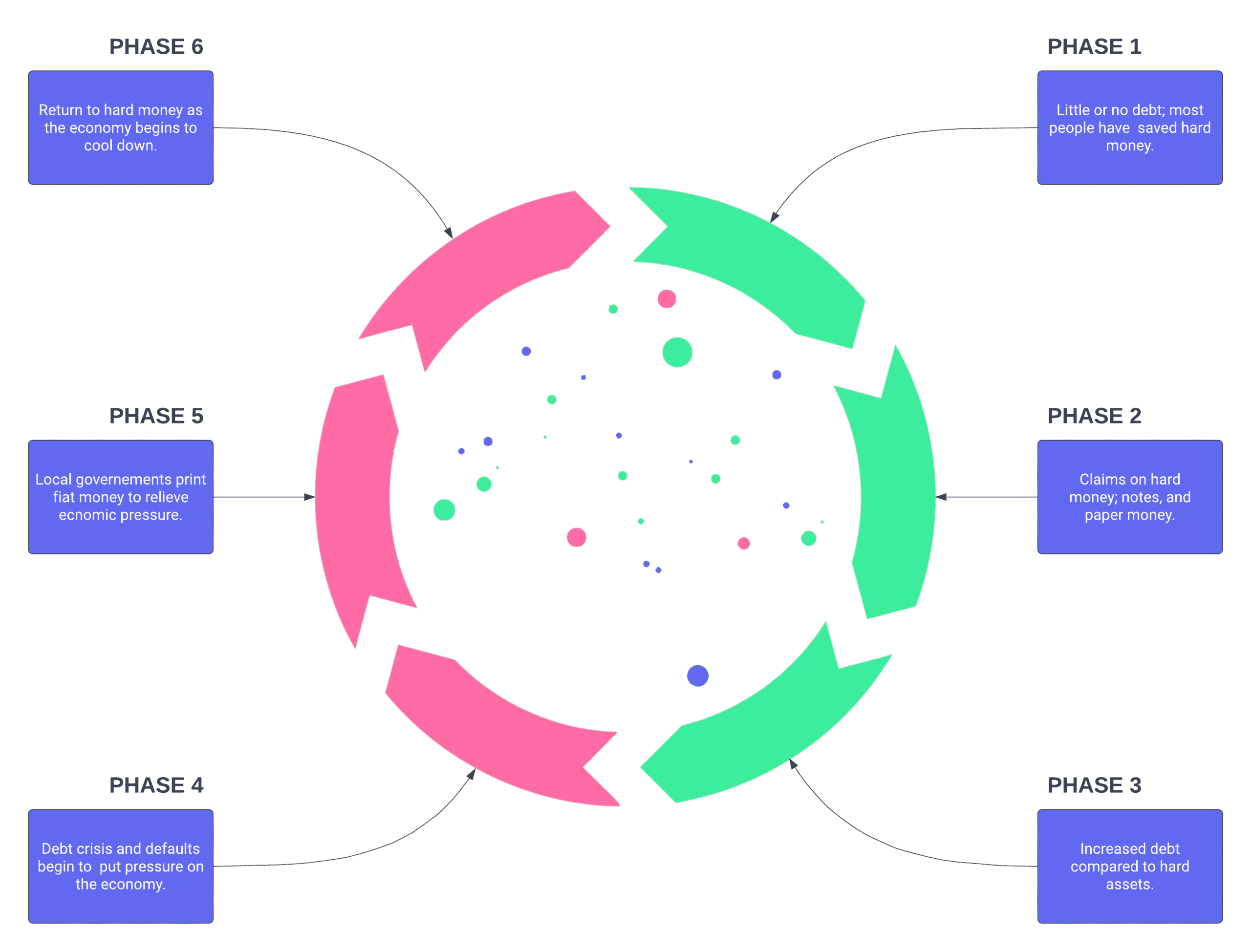

Macroeconomic Effects

Credit scoring plays a vital role in economic growth by helping expand access to credit markets, lowering the price of credit, and reducing delinquencies and defaults. Credit scoring has several benefits for the economy as a whole. First, it helps families break the generational cycle of low economic status by increasing access to homeownership, one of the essential steps in the accumulation of wealth. Second, it helps promote economic expansion and protect against recession by reducing liquidity constraints.

For consumers, scoring is the key to homeownership and consumer credit. It increases competition among lenders, which drives down prices. Decisions can be made faster and cheaper, and more consumers can be approved. It helps spread risk more fairly, so vital resources, such as insurance and mortgages, are priced more reasonably.

Credit scoring increases access to financial resources, reduces costs, and helps manage risk for businesses, especially small and medium-sized enterprises.

For the national economy, credit scoring helps smooth consumption during cyclical periods of unemployment and dampens business-cycle swings. By enabling loans and credit products to be bundled according to risk and sold as securitized derivatives, credit scoring connects consumers to secondary capital markets and increases the amount of capital available to be extended or invested in economic growth.

Despite the significant steps made by credit scoring toward increasing consumers' access to credit, national debt burdens have remained relatively constant for the past 20 years at between 11.8% and 14.4%.

Since 1970, the year that saw the enactment of the FCRA, the primary law that enables credit reporting and scoring in the United States, U.S. consumers have enjoyed significantly increased access to credit. This is especially true for traditionally underserved market segments. Access to credit among consumers in the lowest 20% income bracket jumped over 70% between 1970 and 2001.

Credit scoring has made lending decisions faster and more equitable. Even significant lending decisions can now be made in a matter of hours or minutes rather than days or weeks.

Auto Financing and Transportation

A 2014 Stanford University study found that 84% of automobile loans received a decision within an hour and 23% received a decision within 10 minutes.

The majority of retailers open all charge accounts in less than 2 minutes. In addition to speed and convenience, scoring makes credit cheaper, which means lower costs and greater access for consumers.

Credit scoring enables lenders to extend credit to as many as 11,000 extra customers per 100,000 applicants by making the costs of extending credit lower. As a result, more than 90% of U.S. cardholders report being satisfied with their credit opportunities.

With such increased access to credit, one might expect a risk of overextension or moral hazard, particularly in lower-income groups. Due to the increased accuracy and predictiveness of credit scoring, however, this is not the case.

In fact, credit scoring enables lenders to be more proactive in preventing overextension and moral hazard. Because credit scoring gives lenders the ability to evaluate risk continuously and make timely corrections, U.S. delinquency rates are extremely low. In the fourth quarter of 2002, only 3.9% of all mortgage borrowers and only 4.6% of all credit card borrowers were 30 days or more delinquent, and 60% of U.S. borrowers had never been delinquent in the previous seven years.

Consumers also benefit from the increased competition that credit scoring brings. Credit scores make it possible for lenders to prescreen and qualify applicants cost-effectively, which makes competition among lenders more efficient. Credit scores also enable lenders to predict risk more accurately, which reduces the "premium" a lender needs to charge to cover potential losses. Together with increased competition, this has dramatically expanded consumer choice, and credit card interest rates have plummeted: in 1990, 73% of credit cards had interest rates higher than 18%, while in 2002, 71% of credit cards had interest rates of 16.49% or lower and only 26% were 18% or higher.

Access to Home Ownership

Over the past ten years, credit scoring has also become a prevalent factor in mortgage lending. In 1996, only 25% of mortgage lenders used credit scores as a part of their underwriting procedures.

By 2002, more than 90% of mortgage lenders used credit scoring and automated underwriting technologies [37]. Before credit scoring, mortgage underwriting took an average of three weeks. By 2002, credit scoring enabled lenders to underwrite and approve 75% of mortgage applications in less than three minutes.

Additionally, a Fannie Mae survey showed that using credit scoring reduces mortgage origination costs by about $1,500 per loan [39]. As a result, more equity is available to homeowners, who can now extract up to 90% of their homes' value under Fannie Mae's underwriting guidelines, compared with just 75% in 1993.

A Tower Group study concluded that U.S. mortgage rates were on average two full percentage points lower than those in Europe because credit scores make it possible to securitize and sell mortgage loans in the secondary market. As a result, the study estimated that U.S. consumers save as much as $120 billion annually in mortgage interest payments. In total, it is estimated that credit scoring enabled homeowners to extract more than $700 billion of accumulated equity from their homes, which was then infused back into the national economy.

Economic Benefits

An economy without credit will have a very hard time keeping up with the rapid development of the digital economy. Credit is bad when it finances overconsumption that is not paid back. When used to allocate resources, reduce fraud, and produce income efficiently, it can help create a safer, more transparent, and more inclusive economy for everyone.

Despite all the benefits credit and credit scoring have delivered, the system suffers from significant complications that cause inconsistencies in the scoring process, making it unreliable for global implementation. Data is extremely heterogeneous, coming in all shapes and sizes from different cultures, regulatory environments, and technologies. However, modern data networks tend to be centralized, homogeneous frameworks that process data the same way every time to produce the same outcome or extract value.

Social order and low corruption are the formula for progress. By developing bond, equity, and derivatives markets, people will be able to leverage their savings and assets to invest in innovation and share in the success of their economy in the safest way possible.

Despite the widespread use of analytical tools such as machine learning, most credit scores lack features that would extend and enhance their utility across heterogeneous users and applications.

The Value of A Credit Score

Credit is trusted data wrapped in real value. Blockchain, a trustless system, still needs to be trusted to reach mass adoption; once blockchain technology is integrated, trust is no longer required.

The credit (bond) market is more important than innovation. Credit is the most correlated asset class. It does not require collateral, so it can be created out of thin air and issued immediately, making it highly liquid and volatile. Even though credit can be created overnight, this is not the same as printing money: cash settles a transaction without providing any data points, whereas credit is tied to the user and their personal decisions. The borrower's reputation is the collateral. If the borrower is a good investment for society, they become credible; if they are a bad investment, their credibility and opportunities suffer.

A decentralized credit protocol is the missing component in the DeFi ecosystem and the capital markets, and its absence is bottlenecking global adoption. If there were safe, consistent, and private incentives for countries, corporations, and consumers to transition without taking from one another, they would pave the way overnight. Integrating credit protocols into the blockchain ecosystem to form a decentralized bond market will allow rapid, exponential growth while protecting incoming investors.

A credit score is the smallest implementation of blockchain technology with the highest economic output for the global economy. It is a risk metric that gauges how much credit can be safely and consistently allocated to users in the most efficient, fair, and inclusive way. Once integrated, credit scores reached mass adoption within an average of 2-3 years in each industry. Motivation only lasts so long, which means effort plays an important role.

By shrinking the loan application process from weeks to minutes, efficient credit allocation accelerated the United States real estate market, allowing the average citizen to become a homeowner and extract value from their mortgage to invest in the market and partake in the global economy. The key measure is the amount of effort a user must exert to be part of a network: the lowest input of value or effort for the highest output of value.

The difference between invention and innovation is adoption. Adoption requires motivation. Most of the time, this motivation is financial; other times it concerns more important political, economic, or standard-of-living issues.


Introduction

The Main Problem To Solve

Solving it means pinpointing and addressing the problem with the highest impact on current industry concerns by providing a solution to the majority of the shared issues restricting access to financial tools and services. Which solution requires the lowest input of value and effort while providing the highest output?

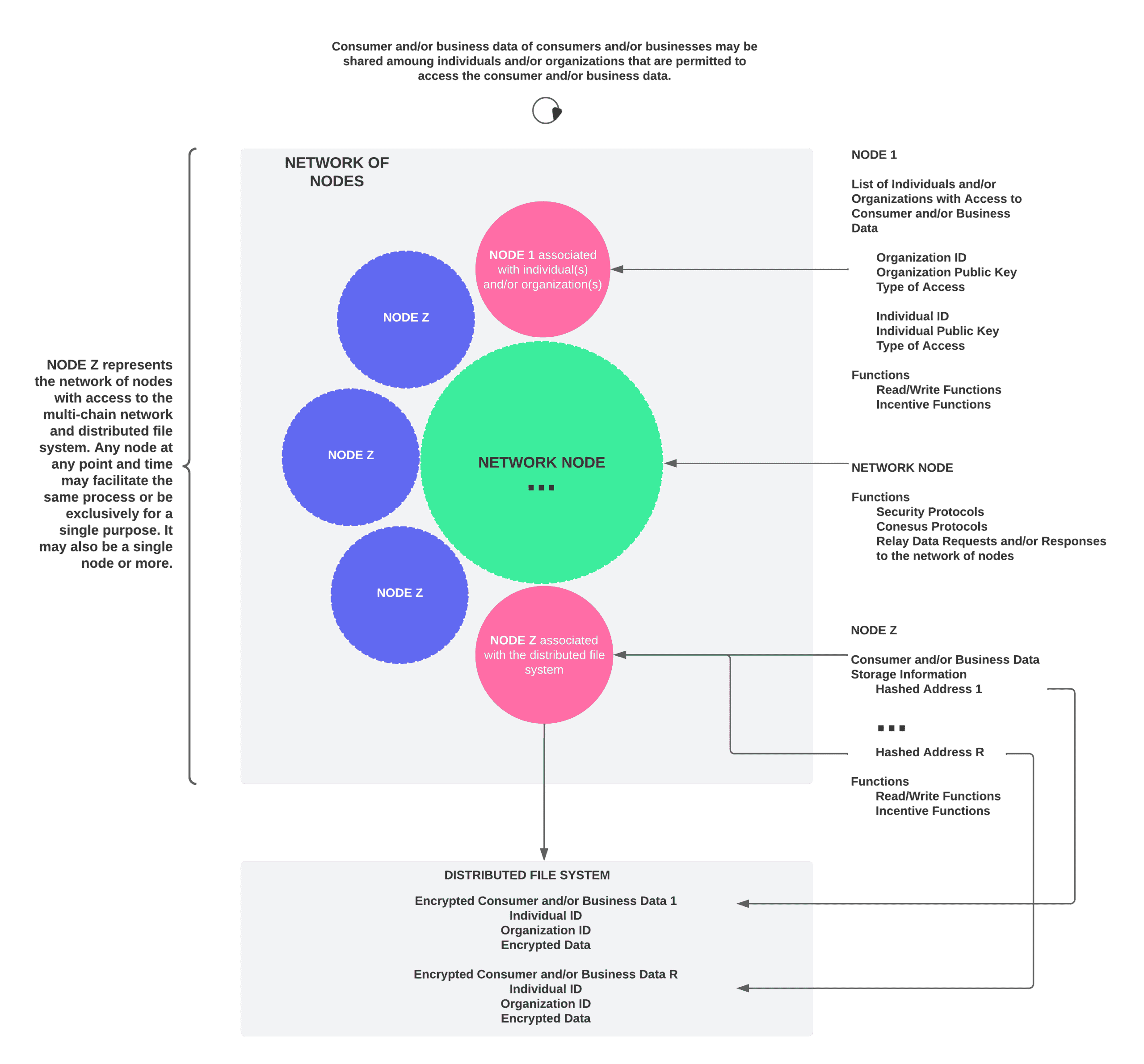

A reporting agency can collect data relating to consumers or businesses, and a group of individuals or organizations can pay the reporting agency to access that data. For example, a credit reporting agency can collect consumer or business data, and a group of individuals or organizations (e.g., crowdfunders, financial institutions) can pay the credit reporting agency to access it. In this case, each individual or organization independently provides its consumer or business data to the credit reporting agency, allowing the agency to serve as a single source of consumer or business data and to charge individuals or organizations for access.

However, the credit reporting agency does not provide the individuals or organizations with any consideration for initially supplying the consumer or business data. Furthermore, providing consumer or business data to credit reporting agencies creates security and privacy concerns. For example, a credit reporting agency can resell consumer or business data without the knowledge or consent of the consumer or business, or an individual or organization that provided the data can suffer a security breach that allows an unauthorized user to obtain it.

In some cases, a Substrate-based blockchain can be used to store consumer or business data. A blockchain is a distributed database that maintains a continuously growing list of records, called blocks, that are linked together to form a chain. Each block can contain a timestamp and a link to the previous block, and the blocks can be secured from tampering and revision.

Additionally, a Substrate-based blockchain can include a secure transaction ledger database shared by parties participating in an established, distributed network of computers (Substrate nodes). The blockchain can record a transaction (e.g., an exchange or transfer of information) that occurs in the network, thereby reducing or eliminating the need for trusted, centralized third parties. Further, the blockchain can serve as a record of consensus with a cryptographic audit trail that is maintained and validated by a set of independent computers.
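The block structure described above, in which each block carries a timestamp and a link to the previous block, can be illustrated with a minimal Python sketch. This is a generic hash-linked chain, not Substrate's actual block format (real Substrate block headers carry fields such as a parent hash, block number, state root, and extrinsics root, and blocks are finalized by consensus). `Block`, `append_block`, and `verify_chain` are hypothetical names used only for illustration.

```python
import hashlib
import json
import time
from dataclasses import dataclass, field


@dataclass
class Block:
    """A simplified block: payload, timestamp, and the hash of its parent."""
    index: int
    payload: dict
    prev_hash: str
    timestamp: float = field(default_factory=time.time)

    def digest(self) -> str:
        # Hash a canonical JSON encoding so equal contents give equal hashes.
        encoded = json.dumps(
            [self.index, self.payload, self.prev_hash, self.timestamp],
            sort_keys=True,
        ).encode()
        return hashlib.sha256(encoded).hexdigest()


def append_block(chain: list[Block], payload: dict) -> Block:
    """Link a new block to the current tip of the chain."""
    prev_hash = chain[-1].digest() if chain else "0" * 64  # genesis has no parent
    block = Block(index=len(chain), payload=payload, prev_hash=prev_hash)
    chain.append(block)
    return block


def verify_chain(chain: list[Block]) -> bool:
    """Recompute every link; editing an earlier block breaks the next link."""
    return all(cur.prev_hash == prev.digest() for prev, cur in zip(chain, chain[1:]))


chain: list[Block] = []
for event in ("account_opened", "payment_made", "payment_made"):
    append_block(chain, {"event": event})
print(verify_chain(chain))  # True
chain[1].payload["event"] = "payment_missed"  # rewrite history
print(verify_chain(chain))  # False
```

Because each block stores its parent's hash, editing any earlier block invalidates every later link. This check alone cannot detect an edit to the newest block; in a real network that guarantee comes from consensus among independent nodes.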

However, storing consumer or business data directly on the blockchain can create scalability issues when large quantities of data are involved. Furthermore, without an adequate system for incentivizing individuals or organizations to keep updating the blockchain with consumer or business data, the blockchain cannot be a reliable source of up-to-date consumer or business data.

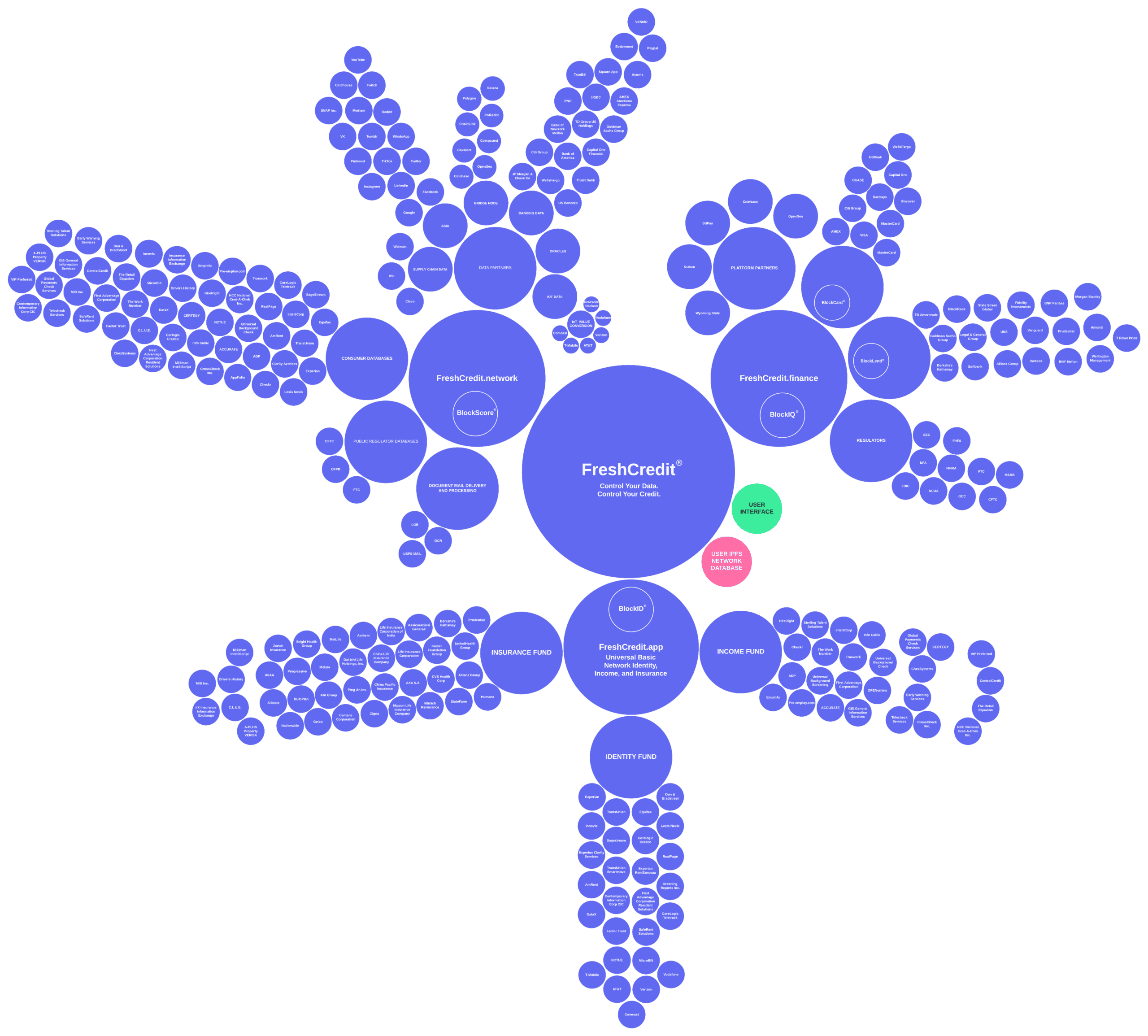

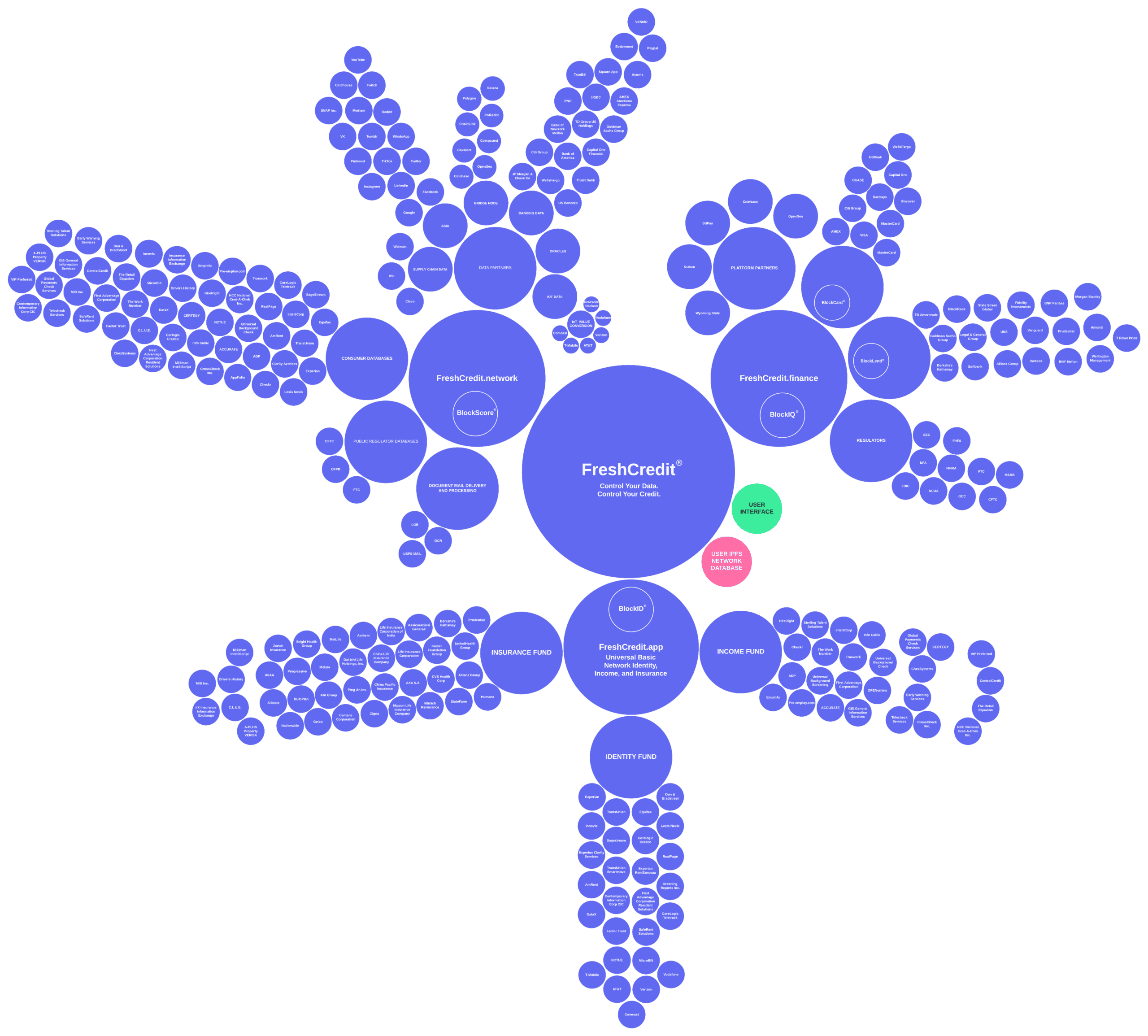

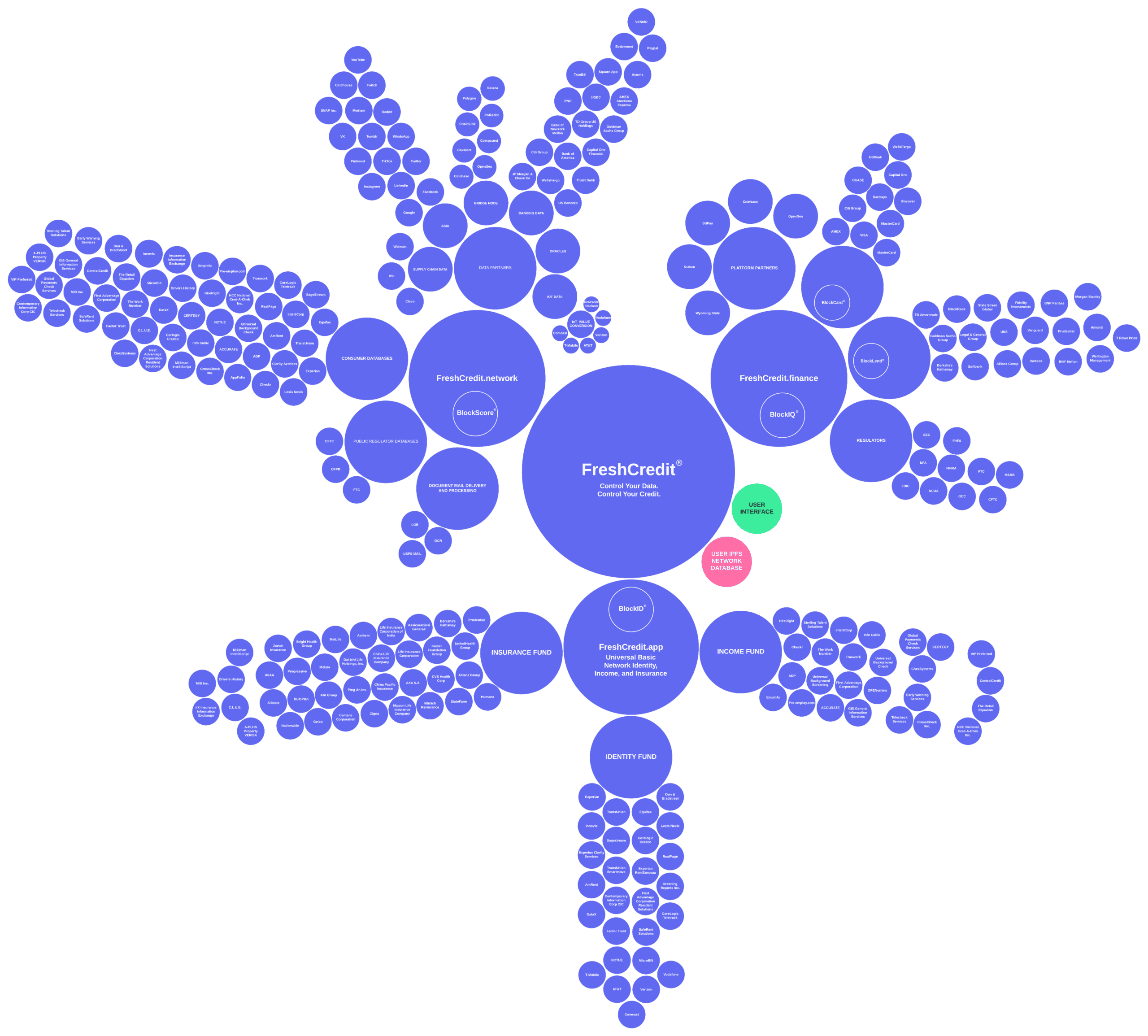

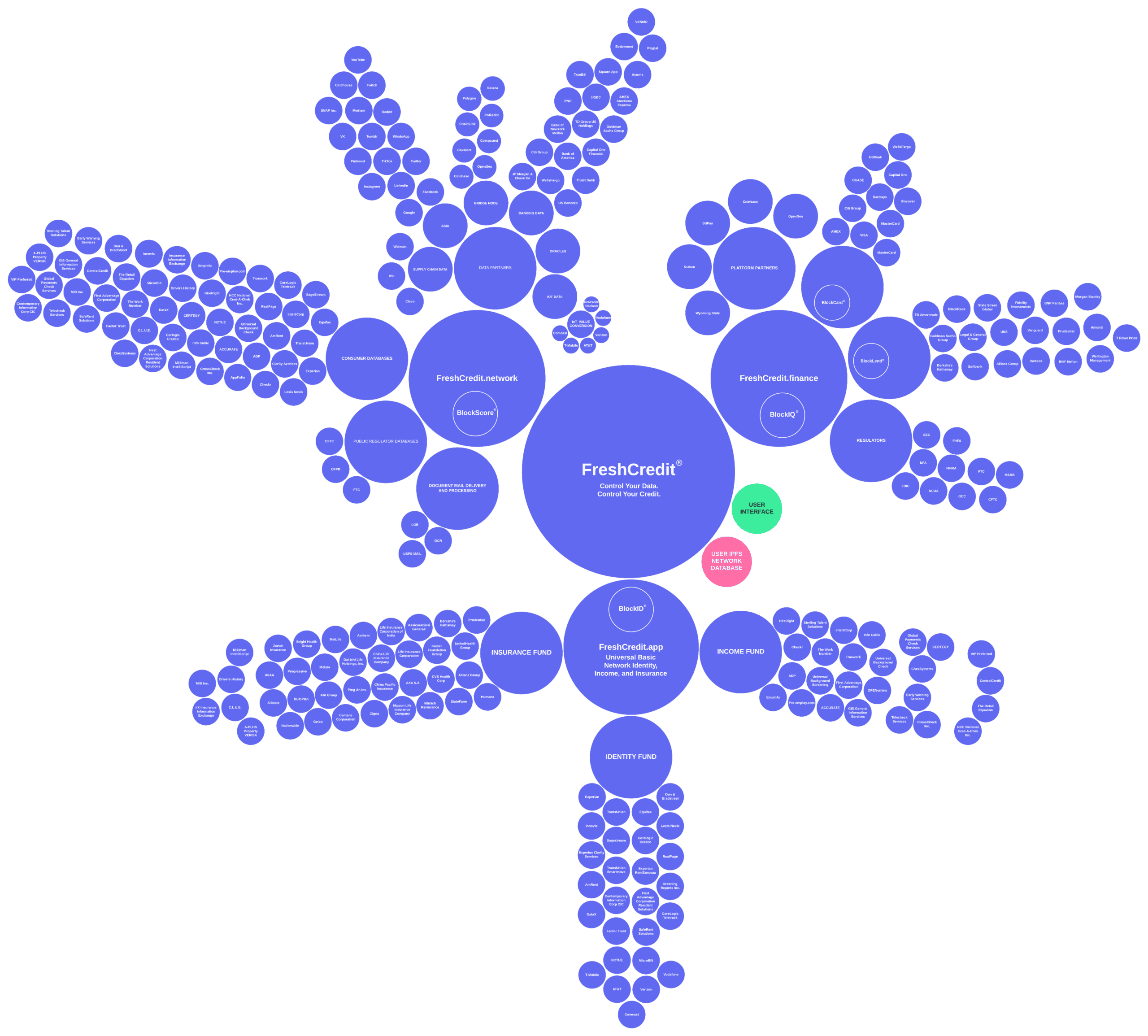

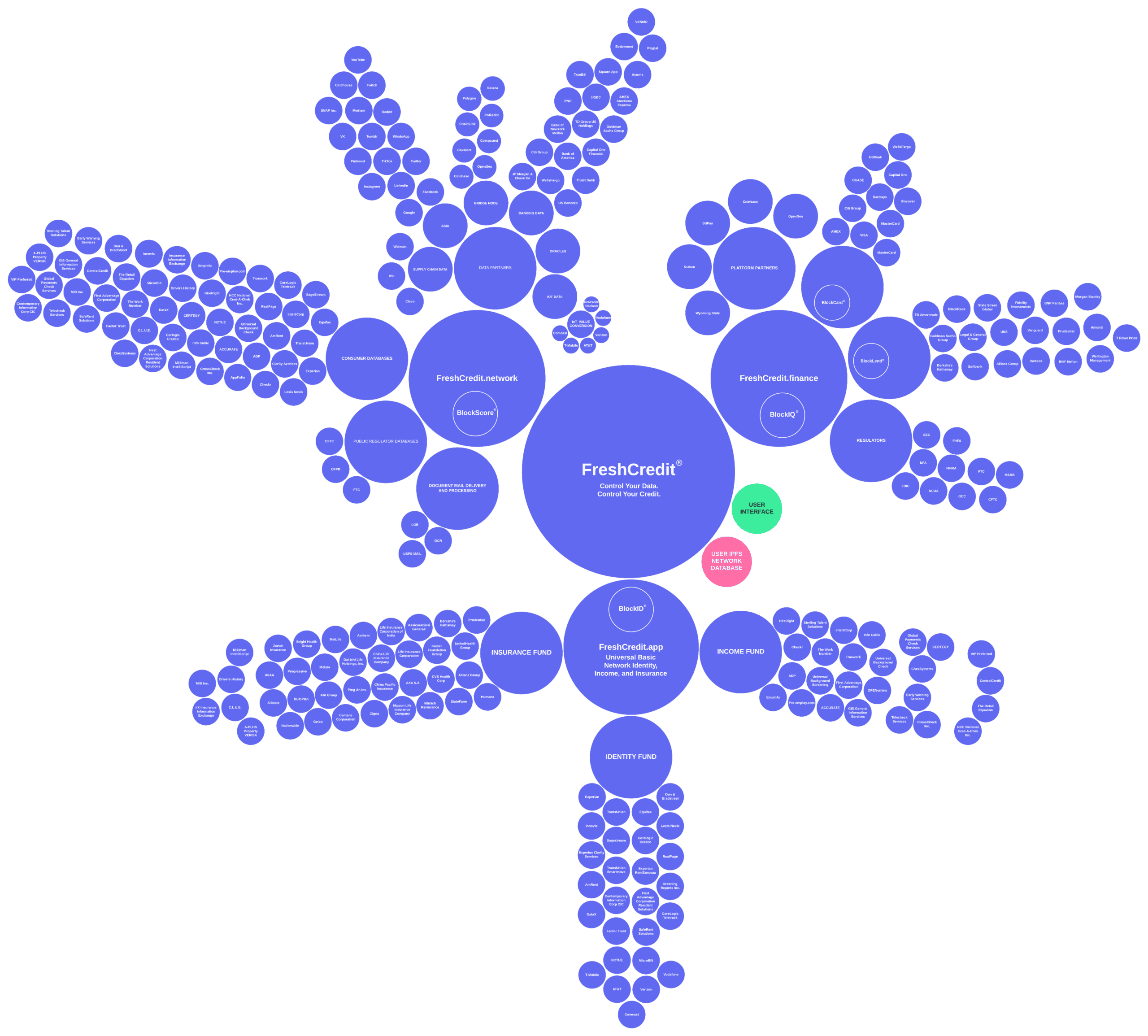

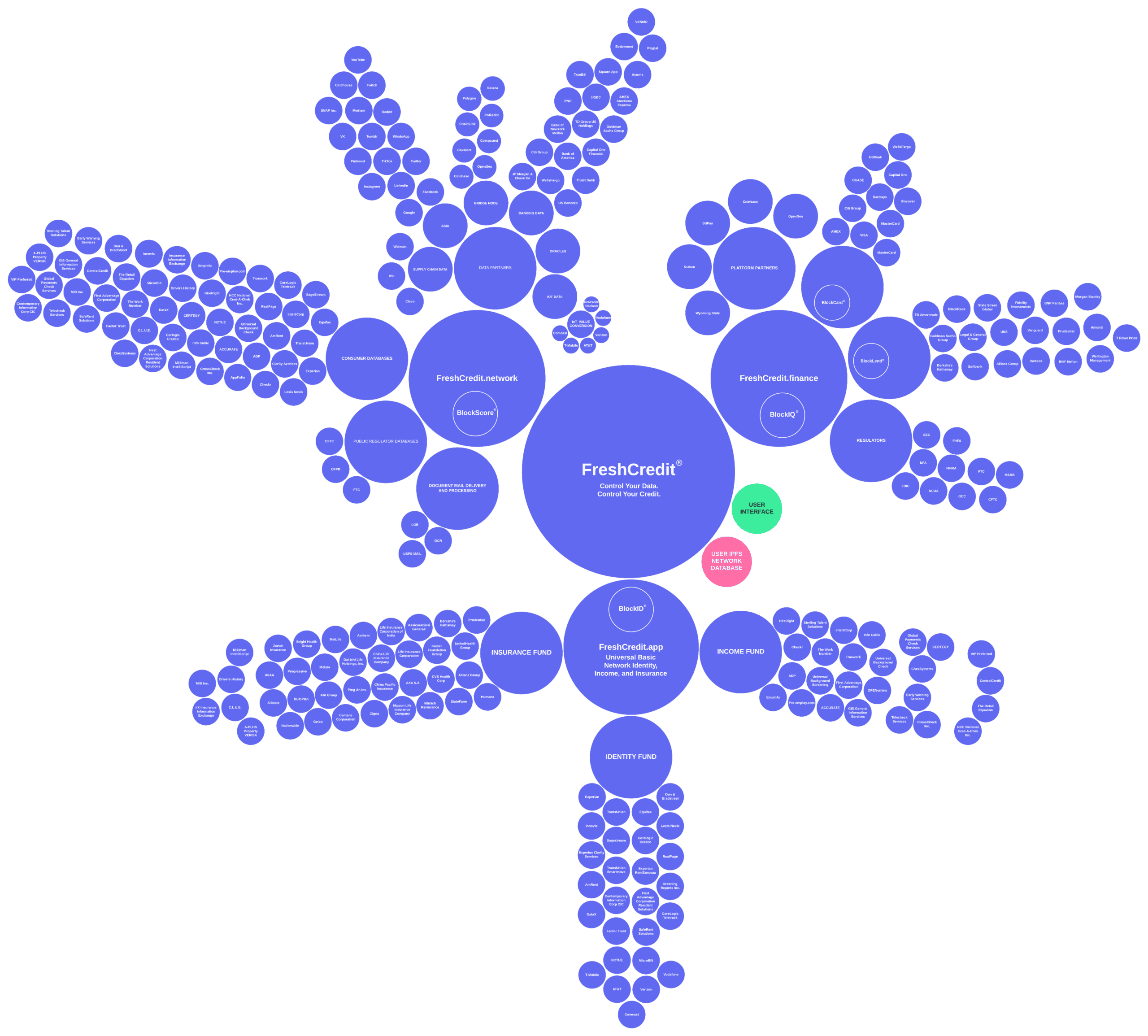

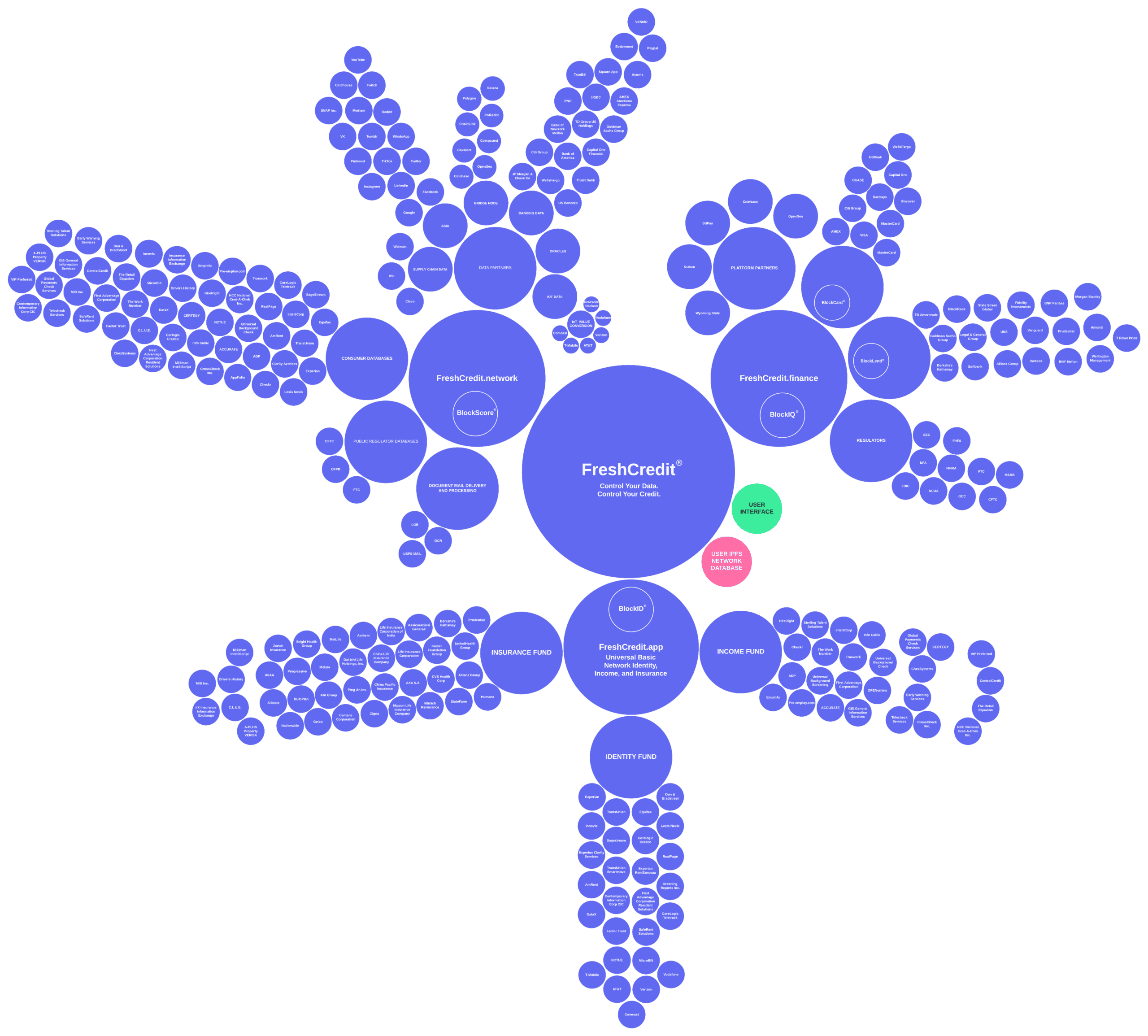

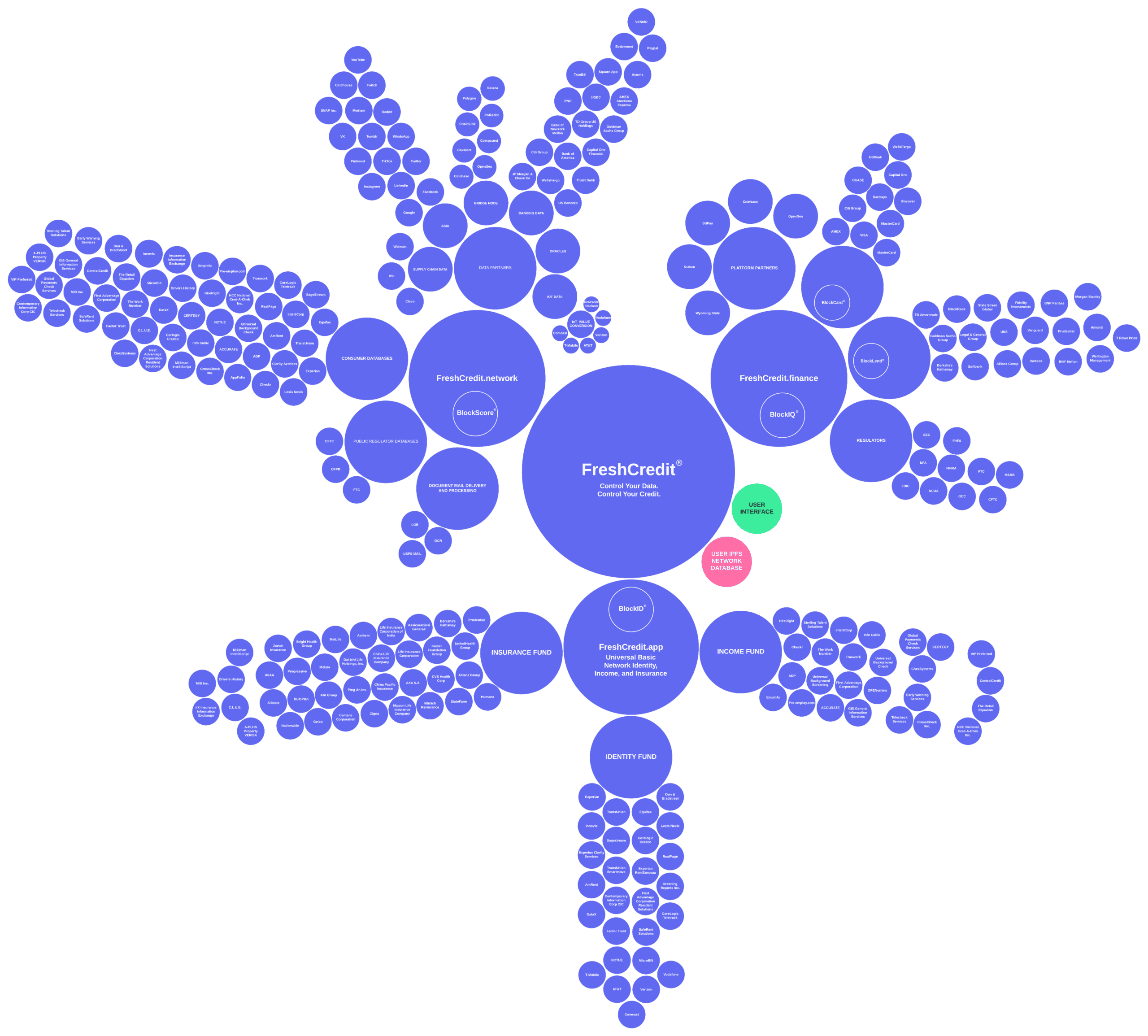

The Fresh Protocol has three main components. Together they improve current credit scoring and lending systems by implementing composable, inclusive scoring models that can serve emerging markets using alternative data, while maintaining the highest security and privacy practices blockchain technology provides and allowing centralized and decentralized institutions to offer compliant, secure, and fair lending opportunities at a global scale.

BlockID® dNFT

This is the secure global financial identity that allows lenders to provide compliant lending options to consumers and businesses without exposing the user's personal or sensitive data. The BlockID® dNFT (dynamic NFT) can be updated and altered over time alongside the network and can be used to self-collateralize loans. It also serves as the credit reporting registry, tracking the current and historical debt obligations tied to the user.
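The whitepaper does not specify the BlockID® dNFT's internal data model. As a hypothetical sketch, its mutable state could be modeled as an append-only, versioned history of debt obligations: the token changes over time as new reports arrive, while every earlier state remains available for audit. `DebtObligation`, `BlockIDRecord`, and all field names are illustrative assumptions, not a published Fresh Protocol interface, and amounts are kept in integer cents to avoid floating-point rounding.

```python
from dataclasses import dataclass, field
from datetime import date


@dataclass(frozen=True)
class DebtObligation:
    """One reported state of a loan; no personal identifiers are stored."""
    lender_id: str
    loan_id: str
    principal_cents: int
    balance_cents: int
    opened: date
    status: str  # e.g. "current", "late", "paid"


@dataclass
class BlockIDRecord:
    """Hypothetical dynamic-NFT state: a token id plus a versioned debt history."""
    token_id: str
    history: list[tuple[int, DebtObligation]] = field(default_factory=list)
    version: int = 0

    def update(self, obligation: DebtObligation) -> int:
        # Append instead of overwriting so every past state stays auditable.
        self.version += 1
        self.history.append((self.version, obligation))
        return self.version

    def current_obligations(self) -> dict[tuple[str, str], DebtObligation]:
        # The latest report for each (lender, loan) pair is the current state.
        latest: dict[tuple[str, str], DebtObligation] = {}
        for _, obligation in self.history:
            latest[(obligation.lender_id, obligation.loan_id)] = obligation
        return latest

    def total_balance_cents(self) -> int:
        return sum(o.balance_cents for o in self.current_obligations().values())


record = BlockIDRecord(token_id="blockid-0001")
record.update(DebtObligation("lender-a", "auto-1", 1_500_000, 1_500_000, date(2024, 1, 5), "current"))
record.update(DebtObligation("lender-a", "auto-1", 1_500_000, 1_450_000, date(2024, 1, 5), "current"))
print(record.version, record.total_balance_cents())  # 2 1450000
```

Keying the current state by (lender, loan) lets one identity carry several concurrent obligations, and keeping personal data out of the record mirrors the goal of not exposing the user's sensitive information to lenders.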

BlockScore® Protocol

This is the standardized metric of the user's creditworthiness: an unbiased, transparent, and decentralized scoring model similar to VantageScore or FICO that also allows alternative data and economic models to be incorporated to more completely capture the user's financial identity. It is governed by the non-profit organization the BlockScore® Network.

BlockIQ® DAO

This is the transparent algorithmic protocol controlling the BlockIQ® DAO (decentralized autonomous organization), a non-custodial liquidity pool that generates value by tokenizing new data generated across the network. That data improves the overall accuracy of the BlockScore® Protocol, which in turn affects loan interest and the allocations of the BlockIQ® DAO. The BlockIQ® DAO allows assets to be staked to the liquidity pool using a user's BlockID® dNFT.

SECURITY

Security is provided by supporting the Substrate network with a tamper-resistant data structure (e.g., the parachain), by implementing various forms of authentication, by restricting access to the network of Substrate nodes to particular individuals, organizations, or parties, or the like. For example, the blockchain improves security by preserving an immutable record of the consumer or business data and by using cryptographic links between blocks, which reduces the potential for unauthorized tampering with the data. Security is further improved because every Substrate node with access to the chain independently verifies each transaction added to it. The network also provides failover protection: the first Substrate node can continue to operate even if one or more other Substrate nodes fail.

INCENTIVE

By incentivizing individuals or organizations to update the IPFS cloud network with new consumer or business data, the consumer or business data can serve as a reliable indicator of the creditworthiness of the consumer or business. The first Substrate node also conserves processing, network, and memory resources. For example, it conserves the processing and network resources that might otherwise be used to query a set of credit bureau data sources for consumer or business data. As another example, by using the IPFS cloud network to store the consumer or business data, the network of Substrate nodes scales better and conserves the memory that would otherwise be needed to store that data on-chain.

Some implementations described herein include a first Substrate node, in a network of Substrate nodes, that can share consumer or business data by using the Substrate blockchain, an on-chain record associated with the consumer or business, and the IPFS cloud network. Some implementations described herein also provide a mechanism for incentivizing individuals or organizations to use the blockchain and the IPFS cloud network to share consumer or business data, as described further herein.


Architecture

The BlockID® dNFT – Data Management Protocol (DMP)

The Fresh Protocol operates via a distributed cloud node network on the Polkadot blockchain, using parachain technology and side-chain operators. This allows interoperability to extend to other blockchain networks in the decentralized ecosystem, such as Ethereum, Cardano, Stellar, and Ripple. The Fresh Protocol's nodes use validators maintained on cloud infrastructure such as Microsoft Azure, AWS, and Google Cloud, which allows the nodes to connect to centralized institutions through off-chain operators.

The parachain’s validators then validate all the data reported by the side-chain operators before being cryptographically stored and analyzed by the composable open-source credit scoring algorithms.

The user's BlockID® is the key and access point through which the user controls and customizes data-sharing options, interacting with their BlockID® and with the data ledger, which stores hashed versions of all data captured on the network. This lets users customize the amount and type of data they allow to be analyzed when they choose to monetize their data through the network. User-specific data earnings are calculated and fractionalized from the total earnings generated on the monetization layer and stored on the earnings ledger before being distributed to each user's BlockID® dNFT wallet.
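The paragraph above states that user-specific earnings are "calculated and fractionalized" from the monetization layer's total but does not give the formula. A minimal sketch, assuming (hypothetically) that earnings are split pro rata by the number of data units each user opted in for analysis: amounts are integer base units, and the largest-remainder method hands out leftover units so the shares always sum exactly to the total. `split_earnings` and the weighting rule are assumptions, not the protocol's specified method.

```python
def split_earnings(total_units: int, contributions: dict[str, int]) -> dict[str, int]:
    """Split an integer earnings total pro rata by each user's contributed data units.

    Uses the largest-remainder method so the shares always sum to total_units.
    """
    if total_units < 0 or any(w < 0 for w in contributions.values()):
        raise ValueError("earnings and contributions must be non-negative")
    weight_sum = sum(contributions.values())
    if weight_sum == 0:
        # Nobody contributed data, so nothing is credited.
        return {user: 0 for user in contributions}

    shares: dict[str, int] = {}
    remainders: list[tuple[int, str]] = []
    for user, weight in contributions.items():
        # Floor of the exact pro-rata share, plus the remainder for ranking.
        quotient, remainder = divmod(total_units * weight, weight_sum)
        shares[user] = quotient
        remainders.append((remainder, user))

    # Flooring leaves fewer undistributed units than there are users;
    # give one each to the largest remainders (ties broken by user id).
    leftover = total_units - sum(shares.values())
    for _, user in sorted(remainders, key=lambda r: (-r[0], r[1]))[:leftover]:
        shares[user] += 1
    return shares


print(split_earnings(100, {"alice": 1, "bob": 1, "carol": 1}))
# {'alice': 34, 'bob': 33, 'carol': 33}
```

Integer arithmetic avoids the rounding drift that floating-point division would introduce when crediting many wallets from a single earnings ledger entry.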

The limitations of existing layer-one and layer-two blockchain solutions were the critical factors in the decision to build the Fresh Protocol on the Substrate blockchain framework, whose cross-chain capabilities address data heterogeneity and fragmentation.

THE PROCESS

1. A process, comprising: receiving, by a first substrate node, new consumer or business data associated with a consumer or business that has credit with a first individual or organization, wherein a substrate node and the IPFS cloud network are to be used to share the new consumer or business data with a network of substrate nodes that are associated with a group of individuals or organizations that is permitted to access the new consumer or business data, and wherein the new consumer or business data has been provided to the IPFS cloud network by a second substrate node associated with a second individual or organization of the group of individuals or organizations; updating, by the first substrate node, a substrate node associated with the consumer or business to include information associated with the new consumer or business data; and performing, by the first substrate node, a group of actions associated with obtaining additional new consumer or business data from the IPFS cloud network or providing the additional new consumer or business data to the IPFS cloud network.

2. The process of step 1, further comprising: receiving, from a device associated with the first individual or organization, a request for the new consumer or business data; obtaining, by the first substrate node, the information associated with the new consumer or business data from the substrate node; obtaining, by the first substrate node, the new consumer or business data by using a storage identifier to search the IPFS cloud network; and providing, by the first substrate node, the new consumer or business data to the device associated with the first individual or organization.

3. The process of step 1, wherein the first individual or organization is permitted to use the network of substrate nodes to access the new consumer or business data if an individual or organization identifier for the first individual or organization is stored by a data structure associated with the substrate node, and wherein the individual or organization identifier for the first individual or organization is added to the data structure.

4. The process of step 1, further comprising: generating, after receiving the new consumer or business data, a storage identifier for the new consumer or business data by using a content addressing technique to generate a cryptographic hash value identifying a storage location at which the new consumer or business data is to be stored within the IPFS cloud network, wherein the cryptographic hash value is to be used as the storage identifier for the new consumer or business data.

5. The process of step 1, wherein the substrate node is used for the consumer or business and includes at least one of: data identifying the group of individuals or organizations that are permitted to access the new consumer or business data, a set of storage identifiers identifying a set of storage locations associated with historical consumer or business data of the consumer or business, a first function associated with providing the new consumer or business data to the IPFS cloud network, or a second function associated with obtaining the new consumer or business data from the IPFS cloud network.

6. The process of step 1, wherein the first individual or organization and the second individual or organization lend to consumers or businesses.

7. A first substrate node, comprising: one or more memories; and one or more processors communicatively coupled to the one or more memories, configured to: receive new consumer or business data associated with a consumer or business that has credit with a first individual or organization, wherein a substrate node and the IPFS cloud network are to be used to share the new consumer or business data with a network of substrate nodes that are associated with a group of individuals or organizations that is permitted to access the new consumer or business data; provide a storage identifier for the new consumer or business data to a substrate node associated with the consumer or business, wherein the substrate node associated with the consumer or business is supported by the network of substrate nodes, and wherein the storage identifier is used to identify a storage location at which the new consumer or business data is to be stored within the IPFS cloud network; and perform a group of actions associated with obtaining additional new consumer or business data from the IPFS cloud network or providing the additional new consumer or business data to the IPFS cloud network.

8. The first substrate node of step 7, wherein the one or more processors are further configured to: generate, using a content addressing technique, a cryptographic hash value identifying the storage location at which the new consumer or business data is to be stored within the IPFS cloud network, wherein the cryptographic hash value is to be used as the storage identifier for the new consumer or business data.

9. The first substrate node of step 8, wherein the one or more processors, when performing the group of actions, are to: provide the additional new consumer or business data to the IPFS cloud network to cause substrate nodes associated with other individuals or organizations to obtain the additional new consumer or business data.

10. The first substrate node of step 7, wherein the first individual or organization is permitted to use the network of substrate nodes to access the new consumer or business data if an individual or organization identifier for the first individual or organization is stored by a data structure associated with the substrate node, and wherein the individual or organization identifier for the first individual or organization is added to the data structure.

11. The first substrate node of step 7, wherein the one or more processors, when performing the group of actions, are to: obtain the additional new consumer or business data from the IPFS cloud network, wherein another substrate node in the network of substrate nodes provided the additional new consumer or business data to the IPFS cloud network, wherein the other substrate node is associated with a particular individual or organization.

12. The first substrate node of step 7, wherein the substrate node is used for the consumer or business and includes at least one of: data identifying the group of individuals or organizations that are permitted to access the new consumer or business data, a set of storage identifiers identifying a set of storage locations associated with historical consumer or business data of the consumer or business, a first function associated with providing the new consumer or business data to the IPFS cloud network, or a second function associated with obtaining the new consumer or business data from the IPFS cloud network.

13. The first substrate node of step 7, wherein the one or more processors are further configured to: broadcast the storage identifier for the new consumer or business data to the network of substrate nodes to cause a second substrate node, of the network of substrate nodes, to provide the storage identifier for the new consumer or business data to a substrate node that is accessible to the second substrate node, wherein broadcasting the storage identifier permits, based on a request from a device associated with a second individual or organization, the second substrate node to obtain the storage identifier for the new consumer or business data from the substrate node, use the storage identifier to obtain the new consumer or business data from the IPFS cloud network, and provide the new consumer or business data to the device associated with the second individual or organization.

14. A non-transitory computer-readable medium storing instructions, the instructions comprising: one or more instructions that, when executed by one or more processors, cause the one or more processors of a first substrate node to: receive new consumer or business data associated with a consumer or business that has credit with a first individual or organization, wherein a substrate node and the IPFS cloud network are to be used to share the new consumer or business data with a network of substrate nodes that are associated with a group of individuals or organizations that is permitted to access the new consumer or business data; provide a storage identifier for the new consumer or business data to a substrate node associated with the consumer or business; broadcast the storage identifier for the new consumer or business data to the network of substrate nodes to cause a second substrate node, of the network of substrate nodes, to provide the storage identifier for the new consumer or business data to a substrate node that is accessible to the second substrate node; and perform a group of actions associated with obtaining additional new consumer or business data from the IPFS cloud network or providing the additional new consumer or business data to the IPFS cloud network.

15. The non-transitory computer-readable medium of step 14, wherein the one or more instructions, when executed by the one or more processors, further cause the one or more processors to: receive, from a device associated with the first individual or organization, a request for the new consumer or business data; obtain information associated with the new consumer or business data from the substrate node; obtain the new consumer or business data by using the storage identifier to search the IPFS cloud network; and provide the new consumer or business data to the device associated with the first individual or organization.

16. The non-transitory computer-readable medium of step 15, wherein broadcasting the storage identifier permits, based on a request from a device associated with a second individual or organization, the second substrate node to obtain the storage identifier for the new consumer or business data from the substrate node, use the storage identifier to obtain the new consumer or business data from the IPFS cloud network, and provide the new consumer or business data to the device associated with the second individual or organization.

17. The non-transitory computer-readable medium of step 14, wherein the first individual or organization is permitted to use the network of substrate nodes to access the new consumer or business data if an individual or organization identifier for the first individual or organization is stored by a data structure associated with the substrate node, and wherein the individual or organization identifier for the first individual or organization is added to the data structure.

18. The non-transitory computer-readable medium of step 14, wherein the new consumer or business data received from the IPFS cloud network has been encrypted using a first key associated with the consumer or business, and wherein the one or more instructions, when executed by the one or more processors, further cause the one or more processors to: decrypt the new consumer or business data using a second key associated with the consumer or business; encrypt the new consumer or business data using a first key associated with the first individual or organization; and provide the new consumer or business data to a device associated with the first individual or organization, wherein the new consumer or business data has been encrypted using the first key associated with the first individual or organization to permit the device associated with the first individual or organization to decrypt the new consumer or business data using a second key associated with the first individual or organization.

19. The non-transitory computer-readable medium of step 14, wherein the substrate node is used for the consumer or business, and includes at least one of: data identifying the group of individuals or organizations that are permitted to access the new consumer or business data, a set of storage identifiers identifying a set of storage locations associated with historical consumer or business data of the consumer or business, a first function associated with providing the new consumer or business data to the IPFS cloud network, or a second function associated with obtaining the new consumer or business data from the IPFS cloud network.
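The data flow in steps 1 through 5 (mirrored in steps 7 through 12) can be illustrated with a minimal in-memory Python sketch. Everything here is an assumption for illustration: `ConsumerRecord` is a hypothetical stand-in for the substrate node associated with the consumer or business, a plain dictionary stands in for the IPFS cloud network, and the storage identifier is a bare SHA-256 hex digest rather than a real IPFS CID. The sketch shows the access check of step 3, the identifier generation of step 4, the two functions of step 5, and the request path of step 2.

```python
from __future__ import annotations

import hashlib
from dataclasses import dataclass, field


def storage_identifier(data: bytes) -> str:
    """Simplified content addressing (step 4): the SHA-256 digest of the data.

    Assumption: real IPFS identifiers are CIDs, not bare hex digests.
    """
    return hashlib.sha256(data).hexdigest()


@dataclass
class ConsumerRecord:
    """Hypothetical per-consumer record holding the fields listed in step 5."""
    consumer_id: str
    shared_store: dict[str, bytes]                          # stand-in for the IPFS cloud network
    permitted_orgs: set[str] = field(default_factory=set)   # access data structure (step 3)
    storage_ids: list[str] = field(default_factory=list)    # historical storage identifiers

    def grant(self, org_id: str) -> None:
        # Adding an identifier to the data structure is what grants access.
        self.permitted_orgs.add(org_id)

    def provide(self, org_id: str, data: bytes) -> str:
        """First function: store new data and record its identifier (steps 1 and 4)."""
        self._require_access(org_id)
        storage_id = storage_identifier(data)
        self.shared_store[storage_id] = data
        if storage_id not in self.storage_ids:
            self.storage_ids.append(storage_id)
        return storage_id

    def obtain_latest(self, org_id: str) -> bytes:
        """Second function: serve a request using the stored identifier (step 2)."""
        self._require_access(org_id)
        if not self.storage_ids:
            raise LookupError(f"no data on file for {self.consumer_id}")
        latest_id = self.storage_ids[-1]
        data = self.shared_store[latest_id]
        # Content addressing lets the reader verify what it fetched.
        if storage_identifier(data) != latest_id:
            raise ValueError("fetched data does not match its identifier")
        return data

    def _require_access(self, org_id: str) -> None:
        if org_id not in self.permitted_orgs:
            raise PermissionError(f"{org_id} may not access {self.consumer_id}")


ipfs_stand_in: dict[str, bytes] = {}
record = ConsumerRecord("consumer-1", ipfs_stand_in)
record.grant("lender-A")
record.grant("lender-B")
record.provide("lender-B", b'{"payment": "on-time"}')  # second organization writes
print(record.obtain_latest("lender-A"))                 # b'{"payment": "on-time"}'
```

Because the identifier is derived from the bytes themselves, a node that fetches data can recompute the hash and reject anything that does not match; that integrity check is one practical benefit of content addressing in a design like this.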
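The broadcast described in steps 13, 14, and 16 can be simulated in a single process. In this hypothetical sketch, each `PeerNode` keeps its own map from consumer to storage identifiers, and publishing data announces the new identifier to every peer so that any of them can later serve a request from its own copy. The class and method names are illustrative assumptions; a real deployment would propagate announcements over peer-to-peer networking and reach agreement through the chain's consensus, none of which is modeled here.

```python
from __future__ import annotations

import hashlib
from collections import defaultdict


class PeerNode:
    """Hypothetical node keeping consumer_id -> list of storage identifiers."""

    def __init__(self, name: str, store: dict[str, bytes]) -> None:
        self.name = name
        self.store = store  # shared stand-in for the IPFS cloud network
        self.records: defaultdict[str, list[str]] = defaultdict(list)
        self.peers: list[PeerNode] = []

    def publish(self, consumer_id: str, data: bytes) -> str:
        """Store data, record its identifier locally, then broadcast the identifier."""
        storage_id = hashlib.sha256(data).hexdigest()
        self.store[storage_id] = data
        self.receive_announcement(consumer_id, storage_id)
        for peer in self.peers:
            peer.receive_announcement(consumer_id, storage_id)
        return storage_id

    def receive_announcement(self, consumer_id: str, storage_id: str) -> None:
        # Each node updates the record it can access; repeated announcements are ignored.
        if storage_id not in self.records[consumer_id]:
            self.records[consumer_id].append(storage_id)

    def serve(self, consumer_id: str) -> bytes:
        """Answer a device's request using only this node's own record."""
        latest = self.records[consumer_id][-1]
        return self.store[latest]


shared: dict[str, bytes] = {}
node_a, node_b = PeerNode("lender-A", shared), PeerNode("lender-B", shared)
node_a.peers.append(node_b)
node_a.publish("c-1", b"payment received")
print(node_b.serve("c-1"))  # b'payment received'
```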
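Step 18 describes a decrypt-then-re-encrypt hand-off between the consumer's keys and the lender's keys. The sketch below assumes that each party's "first key" is a public key and its "second key" the matching private key, and it uses textbook RSA over tiny primes, applied one byte at a time, purely to stay dependency-free. This is insecure and is not how production systems encrypt data; a real implementation would use a vetted cryptography library with hybrid encryption. All function names and key values are hypothetical.

```python
from __future__ import annotations

from math import gcd


def make_keypair(p: int, q: int, e: int) -> tuple[tuple[int, int], tuple[int, int]]:
    """Textbook RSA from two small primes; returns (public, private). Insecure."""
    n, phi = p * q, (p - 1) * (q - 1)
    if gcd(e, phi) != 1:
        raise ValueError("e must be coprime with (p - 1) * (q - 1)")
    d = pow(e, -1, phi)  # modular inverse, Python 3.8+
    return (e, n), (d, n)


def encrypt(data: bytes, public_key: tuple[int, int]) -> list[int]:
    e, n = public_key
    return [pow(b, e, n) for b in data]  # one byte at a time: toy only


def decrypt(cipher: list[int], private_key: tuple[int, int]) -> bytes:
    d, n = private_key
    return bytes(pow(c, d, n) for c in cipher)


# Hypothetical keys: "first key" = public key, "second key" = private key.
consumer_pub, consumer_priv = make_keypair(61, 53, 17)
lender_pub, lender_priv = make_keypair(89, 97, 5)


def reencrypt_for_lender(ciphertext: list[int]) -> list[int]:
    """Node-side hand-off: decrypt with the consumer's key, re-encrypt for the lender."""
    plaintext = decrypt(ciphertext, consumer_priv)
    return encrypt(plaintext, lender_pub)


stored = encrypt(b"credit report", consumer_pub)  # as fetched from the shared store
delivered = reencrypt_for_lender(stored)
print(decrypt(delivered, lender_priv))            # b'credit report'
```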


A Financial Passport

Why use an NFT? – Control and Privacy

The BlockID® dNFT (dynamic NFT) is the user’s interface, allowing individuals and organizations to interact with and leverage their data. By connecting to the network, users gain instant access to a cryptocurrency wallet, which lets them use the BlockIQ® DAO to control and monetize their most valuable asset: their data. When users generate a new cryptocurrency wallet, they create a unique, non-transferable dNFT called a BlockID® dNFT. Using the BlockID® dNFT, the user decides which permission-based data the network can use to improve the scoring algorithms. As data is continually added to the network, the individual generates more tokens, which serve as receipts for the data provided.
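The BlockID® dNFT behavior described above (one token created with each new wallet, no transfers, owner-controlled data permissions, and receipt tokens as data is added) can be modeled as a small in-memory registry. This is a hypothetical Python sketch, not the BlockID® or BlockIQ® implementation: the class and method names, the permission categories, and the rule of one receipt per accepted submission are assumptions made for illustration. On-chain, non-transferability would be enforced by the token contract rejecting transfers, which `transfer` imitates here.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from itertools import count


@dataclass
class BlockIDToken:
    token_id: int
    owner: str                                                # wallet address
    shared_categories: set[str] = field(default_factory=set)  # data the network may use
    receipts: int = 0                                         # receipt tokens earned


class BlockIDRegistry:
    """Hypothetical registry of non-transferable, updatable (dynamic) tokens."""

    def __init__(self) -> None:
        self._ids = count(1)
        self._by_owner: dict[str, BlockIDToken] = {}

    def create_wallet(self, address: str) -> BlockIDToken:
        # One token per wallet, created when the wallet is created.
        if address in self._by_owner:
            raise ValueError(f"{address} already holds a BlockID")
        token = BlockIDToken(next(self._ids), address)
        self._by_owner[address] = token
        return token

    def transfer(self, token_id: int, to: str) -> None:
        raise PermissionError("BlockID tokens are non-transferable")

    def set_permissions(self, address: str, categories: set[str]) -> None:
        # The owner decides which data categories the network may use.
        self._by_owner[address].shared_categories = set(categories)

    def submit_data(self, address: str, category: str) -> int:
        # Only permitted categories count; each accepted submission earns one receipt.
        token = self._by_owner[address]
        if category in token.shared_categories:
            token.receipts += 1
        return token.receipts


registry = BlockIDRegistry()
registry.create_wallet("0xabc")
registry.set_permissions("0xabc", {"payments"})
registry.submit_data("0xabc", "payments")         # shared category: earns a receipt
print(registry.submit_data("0xabc", "location"))  # 1 (not shared, so no new receipt)
```

The token is "dynamic" in the sense that its permissions and receipt count change over its lifetime, while its owner never does.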

- The Data Revolution – Stanford, NBER*

- NFT Projected Growth 185% $485B by 2026, CAGR*

Individuals cannot securely control and monetize their personal data because of ongoing network hacks, which lead to fraud and reporting errors. Large organizations collect and harvest data, which makes them targets for hackers. Additionally, because each organization stores only the data it collects, its decisions are based on fragmented and incomplete data, leading to mistakes and compounding losses. On a typical day, we produce 2.5 quintillion bytes of data. The current data collection landscape has multiple data quality issues, such as incomplete, unverified, inaccurate, and niche-only data. In this framework, much of the data is sourced from a complex network of first-, second-, and third-party data sources.

The challenge with enterprise data is that it is highly distributed across a vast expanse of varied technologies and data platforms. Digital retail, telecommunications, and social media data are structured similarly. Enterprise data, by contrast, lives in mainframes, ETL tools, virtualization layers, relational databases, BI databases, Merkle trees, cloud networks, transaction databases, and hundreds of other components that have evolved over the last few decades. Because every application also has its own data model, the ability of data tools to extract value is undermined by the complexity of integrating the data with the associated technology platforms.

This is why most business problems are distributed-data problems, with data, value, and analysis spread across heterogeneous locations, technologies, and individual sources. Nevertheless, we insist on solving this growing distribution problem with the same centralized models of the past. These models work when the data is already in a standard format, as the new unicorns of social media and digital retail show. We are also starting to see the effects of FinTech and DeFi banking, but much less in insurance, healthcare, and many other business areas.

Realizing the value of data at the enterprise level requires intricate integration and normalization of project data across a massively heterogeneous landscape. Without changes to that data mechanism, the more distributed the organizational components become, the poorer the return on the related project. As data grows in complexity and becomes dispersed both inside and outside the enterprise, the considerable system and data integration projects required as a prerequisite to realizing its value become harder to justify. Doug Laney of Gartner predicted that through 2017, 90 percent of Big Data projects would not be leverageable because they would remain siloed by technology or location.

“Data is the new oil.”

At the level it is usually made, the comparison is superficial. Data is the ultimate renewable resource. There is no reserve of data that has been waiting beneath the surface; data is generated in vast amounts every day. Creating value from data is much more a process of cultivation, in which data becomes more valuable with nurture and care over time, than one of extraction or refinement.

Perhaps the “data as oil” idea can still stimulate some much-needed critical thinking. The human experience with oil has been volatile: fortunes made, bloody conflicts, and a terrifying climate crisis. As we take the first steps into economic policy that will be as transformative and risky as that of the oil industry, foresight is essential. Just as with oil, we have seen “data spills,” in which large amounts of personal data are publicly leaked. What are the long-term effects of “data pollution”?

Personal data is one of the places where we will have to tread most carefully, and another place where the data/oil model can be helpful. Trillions of dollars are being made right now from human-generated data. Cookies track our browsing habits, social apps see our conversations with friends, and fitness trackers see our every movement and location; all of this is being monetized. This is deeply personal data, though it is often not treated as such. Here the comparison to fossil fuels holds: where oil is composed of compressed organic material, personal data is made of the compressed fragments of our lives, from which our human experience can be extracted.

The value of data begins to decline as data homogeneity declines and the resulting cost of extracting insight increases. The components of the solution are not strictly homogeneous.

Reframing data in a human context is critical. There are multiple things we can do to make data more human and, in doing so, generate much more value now and in the long term.

- BigData vs. BigOil – The Economist*

DYNAMIC NFT VS. STATIC NFT


// THE FRESH PROTOCOL

A Financial Passport

Why use an NFT? – Control and Privacy

The BlockID® dNFT is the user’s interface allowing individuals and organizations to interact with and leverage their data. By connecting to the network, users gain instant access to a cryptocurrency wallet, allowing them to leverage the BlockIQ® DAO to control and monetize their most valuable asset, data. When users generate a new cryptocurrency wallet, they create a non-transferable unique dNFT (dynamic NFT) called a BlockID® dNFT. Using the BlockID® dNFT, the user decides which permission-based data the network can use to improve the scoring algorithms. As data is continually added to the network, the individual generates more tokens that serve as receipts for the provided data.

- The Data Revolution – Stanford, NBER*

- NFT Projected Growth 185% $485B by 2026, CAGR*

Individuals cannot securely control and monetize personal data because of ongoing network hacks, leading to fraud and reporting errors. Large organizations collect and harvest data making them targets for hackers. Additionally, because each organization stores the data they collect, their decisions are based on fragmented and incomplete data, leading to mistakes and compounding losses. In a typical day, we produce 2.5 quintillion bytes of data. There are multiple data quality issues in the current data collection landscape, such as incomplete data, unverified data, inaccurate data, and niche-only data. With this framework, much of the data is sourced from a complex network of first, second, and third-party data sources.

The challenge with enterprise data is highly distributed across a vast expanse of variable technologies and data platforms. Digital retail, telecommunications, and social media data are structured similarly. At the same time, enterprise data is in mainframes, ETL tools, virtualization layers, relational databases, BI databases, Merkle tree, cloud networks, transaction databases, and hundreds of other components that have evolved in the last few decades. Coupled with the fact that every application has a different data model, which makes accessing this value with data tools is undermined by the complexity of integrating the data with the associated tech platforms.

This is why most business problems faced are related to distributed data problems with data, value, and analysis hugely distributed across heterogeneous locations, technologies, and individual sources. Nevertheless, we insist on solving this growing distribution problem with the same centralized models of the past. These models work when the data is already in a standard format – something we can see with the new unicorns of social media and digital retailing. We are starting to also see the effects of FinTech and DeFi banking, but not much in the way of insurance, healthcare, and a vast number of other business problem areas.

Realizing the value of data at an enterprise level requires an intricate data integration and normalization of project data across a massively heterogeneous landscape. Without changes to that data mechanism and increasing distribution of the organizational components, the poorer the return or outcome of the related project. As the data grows in complexity, distribution, and dispersion inside and external to the enterprise, it does not justify the considerable system and data integration projects requirements as a prerequisite to data’s value. Doug Laney from Gartner recently pointed out that through 2017 90 percent of Big Data projects will not be leverageable because they will continue to be in siloes of technology or location.

“Data is the new oil.”

The comparison, at the level it is usually made, is surface level. Data is the ultimate renewable resource. Any existing data reserve has not been waiting beneath the surface; data is generated in vast amounts every day. Creating value from data is much more a cultivation process where the data becomes more valuable with nurture and care over time rather than one of extraction or refinement.

Perhaps the “data as oil” idea can stimulate some much-needed critical thinking. The human experience with oil has been volatile; fortunes made, bloody conflicts, and a terrifying climate crisis. As we make the first steps into economic policy that will be as transformative and risky as that of the oil industry, foresight is essential. Just like the oil, we have seen “data spills.” Large amounts of personal data are publically leaked. What are the long-term effects of “data pollution”?

One of the places where we will have to tread most carefully — another place where our data/oil model can be helpful — is in the realm of personal data. Trillions of dollars are being made right now in the world of information through human-generated data. Cookies track our browsing habits; social apps see our conversations with friends; fitness trackers see our every movement and location — all of these things are being monetized. This is deeply personal data, though it is often not treated as such. A similar comparison to fossil fuels in a way: where oil is composed of the compressed organic material, this personal data is made from the compressed fragments of our personal lives, where we can then extract our human experience.

The value of data begins to decline as data homogeneity declines and the resulting cost of extracting insight increases. The components of the solution are not strictly homogenous.

Re-framing data into a human context is critical. There are multiple things we can do to make data more human and, in doing so, generate much more value now and in the long term.

- BigData vs. BigOil – The Economist*

DYNAMIC NFT VS. STATIC NFT

DYNAMIC NFT VS. STATIC NFT

// THE FRESH PROTOCOL

A Financial Passport

Why use an NFT? – Control and Privacy

The BlockID® dNFT is the user’s interface allowing individuals and organizations to interact with and leverage their data. By connecting to the network, users gain instant access to a cryptocurrency wallet, allowing them to leverage the BlockIQ® DAO to control and monetize their most valuable asset, data. When users generate a new cryptocurrency wallet, they create a non-transferable unique dNFT (dynamic NFT) called a BlockID® dNFT. Using the BlockID® dNFT, the user decides which permission-based data the network can use to improve the scoring algorithms. As data is continually added to the network, the individual generates more tokens that serve as receipts for the provided data.

- The Data Revolution – Stanford, NBER*

- NFT Projected Growth 185% $485B by 2026, CAGR*

Individuals cannot securely control and monetize personal data because of ongoing network hacks, leading to fraud and reporting errors. Large organizations collect and harvest data making them targets for hackers. Additionally, because each organization stores the data they collect, their decisions are based on fragmented and incomplete data, leading to mistakes and compounding losses. In a typical day, we produce 2.5 quintillion bytes of data. There are multiple data quality issues in the current data collection landscape, such as incomplete data, unverified data, inaccurate data, and niche-only data. With this framework, much of the data is sourced from a complex network of first, second, and third-party data sources.

The challenge with enterprise data is highly distributed across a vast expanse of variable technologies and data platforms. Digital retail, telecommunications, and social media data are structured similarly. At the same time, enterprise data is in mainframes, ETL tools, virtualization layers, relational databases, BI databases, Merkle tree, cloud networks, transaction databases, and hundreds of other components that have evolved in the last few decades. Coupled with the fact that every application has a different data model, which makes accessing this value with data tools is undermined by the complexity of integrating the data with the associated tech platforms.

This is why most business problems faced are related to distributed data problems with data, value, and analysis hugely distributed across heterogeneous locations, technologies, and individual sources. Nevertheless, we insist on solving this growing distribution problem with the same centralized models of the past. These models work when the data is already in a standard format – something we can see with the new unicorns of social media and digital retailing. We are starting to also see the effects of FinTech and DeFi banking, but not much in the way of insurance, healthcare, and a vast number of other business problem areas.

Realizing the value of data at an enterprise level requires an intricate data integration and normalization of project data across a massively heterogeneous landscape. Without changes to that data mechanism and increasing distribution of the organizational components, the poorer the return or outcome of the related project. As the data grows in complexity, distribution, and dispersion inside and external to the enterprise, it does not justify the considerable system and data integration projects requirements as a prerequisite to data’s value. Doug Laney from Gartner recently pointed out that through 2017 90 percent of Big Data projects will not be leverageable because they will continue to be in siloes of technology or location.

“Data is the new oil.”

The comparison, at the level it is usually made, is surface level. Data is the ultimate renewable resource. Any existing data reserve has not been waiting beneath the surface; data is generated in vast amounts every day. Creating value from data is much more a cultivation process where the data becomes more valuable with nurture and care over time rather than one of extraction or refinement.

Perhaps the “data as oil” idea can stimulate some much-needed critical thinking. The human experience with oil has been volatile; fortunes made, bloody conflicts, and a terrifying climate crisis. As we make the first steps into economic policy that will be as transformative and risky as that of the oil industry, foresight is essential. Just like the oil, we have seen “data spills.” Large amounts of personal data are publically leaked. What are the long-term effects of “data pollution”?

One of the places where we will have to tread most carefully — another place where our data/oil model can be helpful — is in the realm of personal data. Trillions of dollars are being made right now in the world of information through human-generated data. Cookies track our browsing habits; social apps see our conversations with friends; fitness trackers see our every movement and location — all of these things are being monetized. This is deeply personal data, though it is often not treated as such. A similar comparison to fossil fuels in a way: where oil is composed of the compressed organic material, this personal data is made from the compressed fragments of our personal lives, where we can then extract our human experience.

The value of data begins to decline as data homogeneity declines and the resulting cost of extracting insight increases. The components of the solution are not strictly homogenous.

Re-framing data into a human context is critical. There are multiple things we can do to make data more human and, in doing so, generate much more value now and in the long term.

- BigData vs. BigOil – The Economist*

DYNAMIC NFT VS. STATIC NFT

DYNAMIC NFT VS. STATIC NFT

// THE FRESH PROTOCOL

A Financial Passport

Why use an NFT? – Control and Privacy

The BlockID® dNFT is the user’s interface allowing individuals and organizations to interact with and leverage their data. By connecting to the network, users gain instant access to a cryptocurrency wallet, allowing them to leverage the BlockIQ® DAO to control and monetize their most valuable asset, data. When users generate a new cryptocurrency wallet, they create a non-transferable unique dNFT (dynamic NFT) called a BlockID® dNFT. Using the BlockID® dNFT, the user decides which permission-based data the network can use to improve the scoring algorithms. As data is continually added to the network, the individual generates more tokens that serve as receipts for the provided data.

- The Data Revolution – Stanford, NBER*

- NFT Projected Growth 185% $485B by 2026, CAGR*

Individuals cannot securely control and monetize personal data because of ongoing network hacks, leading to fraud and reporting errors. Large organizations collect and harvest data making them targets for hackers. Additionally, because each organization stores the data they collect, their decisions are based on fragmented and incomplete data, leading to mistakes and compounding losses. In a typical day, we produce 2.5 quintillion bytes of data. There are multiple data quality issues in the current data collection landscape, such as incomplete data, unverified data, inaccurate data, and niche-only data. With this framework, much of the data is sourced from a complex network of first, second, and third-party data sources.

The challenge with enterprise data is highly distributed across a vast expanse of variable technologies and data platforms. Digital retail, telecommunications, and social media data are structured similarly. At the same time, enterprise data is in mainframes, ETL tools, virtualization layers, relational databases, BI databases, Merkle tree, cloud networks, transaction databases, and hundreds of other components that have evolved in the last few decades. Coupled with the fact that every application has a different data model, which makes accessing this value with data tools is undermined by the complexity of integrating the data with the associated tech platforms.

This is why most business problems faced are related to distributed data problems with data, value, and analysis hugely distributed across heterogeneous locations, technologies, and individual sources. Nevertheless, we insist on solving this growing distribution problem with the same centralized models of the past. These models work when the data is already in a standard format – something we can see with the new unicorns of social media and digital retailing. We are starting to also see the effects of FinTech and DeFi banking, but not much in the way of insurance, healthcare, and a vast number of other business problem areas.

Realizing the value of data at an enterprise level requires an intricate data integration and normalization of project data across a massively heterogeneous landscape. Without changes to that data mechanism and increasing distribution of the organizational components, the poorer the return or outcome of the related project. As the data grows in complexity, distribution, and dispersion inside and external to the enterprise, it does not justify the considerable system and data integration projects requirements as a prerequisite to data’s value. Doug Laney from Gartner recently pointed out that through 2017 90 percent of Big Data projects will not be leverageable because they will continue to be in siloes of technology or location.

“Data is the new oil.”

The comparison, at the level it is usually made, is surface level. Data is the ultimate renewable resource. Any existing data reserve has not been waiting beneath the surface; data is generated in vast amounts every day. Creating value from data is much more a cultivation process where the data becomes more valuable with nurture and care over time rather than one of extraction or refinement.

Perhaps the “data as oil” idea can stimulate some much-needed critical thinking. The human experience with oil has been volatile; fortunes made, bloody conflicts, and a terrifying climate crisis. As we make the first steps into economic policy that will be as transformative and risky as that of the oil industry, foresight is essential. Just like the oil, we have seen “data spills.” Large amounts of personal data are publically leaked. What are the long-term effects of “data pollution”?

One of the places where we will have to tread most carefully — another place where our data/oil model can be helpful — is in the realm of personal data. Trillions of dollars are being made right now in the world of information through human-generated data. Cookies track our browsing habits; social apps see our conversations with friends; fitness trackers see our every movement and location — all of these things are being monetized. This is deeply personal data, though it is often not treated as such. A similar comparison to fossil fuels in a way: where oil is composed of the compressed organic material, this personal data is made from the compressed fragments of our personal lives, where we can then extract our human experience.

The value of data begins to decline as its homogeneity declines, because the cost of extracting insight rises accordingly. The components of the solution are not strictly homogeneous.

Re-framing data into a human context is critical. There are multiple things we can do to make data more human and, in doing so, generate much more value now and in the long term.

- BigData vs. BigOil – The Economist*

DYNAMIC NFT VS. STATIC NFT

A Financial Passport

Why use an NFT? – Control and Privacy

The BlockID® dNFT is the user’s interface, allowing individuals and organizations to interact with and leverage their data. By connecting to the network, users gain instant access to a cryptocurrency wallet, which lets them use the BlockIQ® DAO to control and monetize their most valuable asset: their data. When users generate a new cryptocurrency wallet, they also create a unique, non-transferable dynamic NFT (dNFT) called a BlockID® dNFT. Through the BlockID® dNFT, the user decides which data the network may use, on a permission basis, to improve the scoring algorithms. As the individual continually adds data to the network, they earn additional tokens that serve as receipts for the data provided.

- The Data Revolution – Stanford, NBER*

- NFT Projected Growth 185% $485B by 2026, CAGR*
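To make the ownership and permission model of the BlockID® dNFT concrete, the following is a minimal Python sketch of how its behavior might be modeled off-chain. It is not the protocol’s smart contract. The names `BlockIDRecord`, `create_wallet`, `grant`, `revoke`, and `contribute`, and the rule of one receipt token per permitted contribution, are hypothetical assumptions made for illustration.

```python
from dataclasses import dataclass, field


class NonTransferableError(Exception):
    """Raised on any attempt to move a BlockID record to another wallet."""


@dataclass
class BlockIDRecord:
    # Hypothetical off-chain model of a BlockID dNFT: exactly one per wallet.
    owner: str
    # Data categories the owner has permitted the network to use for scoring.
    permissions: set[str] = field(default_factory=set)
    # Categories the owner has actually contributed, in order.
    contributions: list[str] = field(default_factory=list)
    # Assumed rule: one receipt token per permitted contribution.
    receipt_tokens: int = 0

    def transfer(self, new_owner: str) -> None:
        # Non-transferable: the record stays bound to the wallet that minted it.
        raise NonTransferableError(f"cannot transfer BlockID of {self.owner} to {new_owner}")

    def grant(self, category: str) -> None:
        self.permissions.add(category)

    def revoke(self, category: str) -> None:
        self.permissions.discard(category)

    def contribute(self, category: str) -> bool:
        """Accept a contribution only for a permitted category; return whether it was accepted."""
        if category not in self.permissions:
            return False
        # The "dynamic" part: the record's state changes as data is added.
        self.contributions.append(category)
        self.receipt_tokens += 1
        return True


def create_wallet(address: str) -> BlockIDRecord:
    # Generating a wallet also mints its BlockID record.
    return BlockIDRecord(owner=address)
```

For example, after `w = create_wallet("0xabc")` and `w.grant("payment_history")`, calling `w.contribute("payment_history")` is accepted and raises `w.receipt_tokens` to 1, while `w.contribute("location")` is refused because that category was never permitted. In an on-chain deployment, non-transferability would more likely be enforced by the token contract rejecting transfers than by an exception in client code.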

Individuals cannot securely control and monetize their personal data because ongoing network hacks lead to fraud and reporting errors. Large organizations collect and harvest data, which makes them targets for hackers. Additionally, because each organization stores only the data it collects, its decisions are based on fragmented and incomplete data, leading to mistakes and compounding losses. Every day, we produce roughly 2.5 quintillion bytes of data. The current data collection landscape suffers from multiple data quality issues, such as incomplete, unverified, inaccurate, and niche-only data. Under the current framework, much of this data is sourced from a complex network of first-, second-, and third-party data sources.

The challenge is that enterprise data is highly distributed across a vast expanse of varied technologies and data platforms. Digital retail, telecommunications, and social media data are structured similarly. Enterprise data, by contrast, lives in mainframes, ETL tools, virtualization layers, relational databases, BI databases, Merkle trees, cloud networks, transaction databases, and hundreds of other components that have evolved over the last few decades. Because every application also has its own data model, the complexity of integrating data across these platforms undermines efforts to access its value with data tools.

This is why most business problems are, at root, distributed-data problems, with data, value, and analysis spread across heterogeneous locations, technologies, and individual sources. Nevertheless, we insist on solving this growing distribution problem with the same centralized models of the past. These models work when the data is already in a standard format, as the new unicorns of social media and digital retail demonstrate. We are also starting to see the effects in FinTech and DeFi banking, but much less so in insurance, healthcare, and many other business areas.
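Because every application has its own data model, even a single fact such as a loan payment can arrive in incompatible shapes. The following Python sketch illustrates the per-source adapter work that centralized integration projects must write and maintain before any analysis can begin. Both source schemas (the `legacy` field names such as `ACCT_NO` and the nested `fintech` JSON layout) and the common `PaymentRecord` schema are hypothetical, invented for this example.

```python
from dataclasses import dataclass
from datetime import date
from decimal import Decimal
from typing import Callable


@dataclass(frozen=True)
class PaymentRecord:
    # Hypothetical common schema that every source is normalized into.
    account_id: str
    amount_cents: int
    paid_on: date


def from_legacy(row: dict) -> PaymentRecord:
    # Hypothetical mainframe export: padded strings, dollars as text, YYYYMMDD dates.
    raw = row["PMT_DT"]
    return PaymentRecord(
        account_id=row["ACCT_NO"].strip(),
        amount_cents=int(Decimal(row["PMT_AMT"]) * 100),  # Decimal avoids float rounding
        paid_on=date(int(raw[:4]), int(raw[4:6]), int(raw[6:8])),
    )


def from_fintech(doc: dict) -> PaymentRecord:
    # Hypothetical JSON API: nested objects, integer cents, ISO-8601 dates.
    return PaymentRecord(
        account_id=doc["account"]["id"],
        amount_cents=doc["payment"]["amount_cents"],
        paid_on=date.fromisoformat(doc["payment"]["date"]),
    )


# One adapter per source; every new platform adds another entry to maintain.
ADAPTERS: dict[str, Callable[[dict], PaymentRecord]] = {
    "legacy": from_legacy,
    "fintech": from_fintech,
}


def normalize(source: str, payload: dict) -> PaymentRecord:
    # Dispatch the raw payload to the adapter registered for its source.
    return ADAPTERS[source](payload)
```

A legacy row `{"ACCT_NO": " 00123 ", "PMT_AMT": "120.50", "PMT_DT": "20240115"}` and a fintech document `{"account": {"id": "00123"}, "payment": {"amount_cents": 12050, "date": "2024-01-15"}}` normalize to equal records, but only because someone wrote each adapter. Multiplying that effort across hundreds of components is the integration cost described above.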